Image Classfication on “Heart Failure Cinical Records” Dataset#

Course Project, UC Irvine, Math 10, S24#

I would like to post my notebook on the course’s website. [Y/n] Y

from ucimlrepo import fetch_ucirepo

hf=fetch_ucirepo(

id=519

)

#url info about this dataset

{x:y for x,y in hf.metadata.items() if "URL" in x.upper()}

{'repository_url': 'https://archive.ics.uci.edu/dataset/519/heart+failure+clinical+records',

'data_url': 'https://archive.ics.uci.edu/static/public/519/data.csv'}

import pandas as pd

type(hf.data)

ucimlrepo.dotdict.dotdict

#ucimlrepo automatically split the data into X and y

#if I want to see the whole df, I have to put them back together

#hf_df=pd.concat([hf.data.features,hf.data.targets],axis=1)

#or

hf_df=pd.read_csv(dict(hf.metadata.items())["data_url"])

#list some data

hf_df[:5]

| age | anaemia | creatinine_phosphokinase | diabetes | ejection_fraction | high_blood_pressure | platelets | serum_creatinine | serum_sodium | sex | smoking | time | death_event | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 75.0 | 0 | 582 | 0 | 20 | 1 | 265000.00 | 1.9 | 130 | 1 | 0 | 4 | 1 |

| 1 | 55.0 | 0 | 7861 | 0 | 38 | 0 | 263358.03 | 1.1 | 136 | 1 | 0 | 6 | 1 |

| 2 | 65.0 | 0 | 146 | 0 | 20 | 0 | 162000.00 | 1.3 | 129 | 1 | 1 | 7 | 1 |

| 3 | 50.0 | 1 | 111 | 0 | 20 | 0 | 210000.00 | 1.9 | 137 | 1 | 0 | 7 | 1 |

| 4 | 65.0 | 1 | 160 | 1 | 20 | 0 | 327000.00 | 2.7 | 116 | 0 | 0 | 8 | 1 |

hf_df[-5:]

| age | anaemia | creatinine_phosphokinase | diabetes | ejection_fraction | high_blood_pressure | platelets | serum_creatinine | serum_sodium | sex | smoking | time | death_event | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 294 | 62.0 | 0 | 61 | 1 | 38 | 1 | 155000.0 | 1.1 | 143 | 1 | 1 | 270 | 0 |

| 295 | 55.0 | 0 | 1820 | 0 | 38 | 0 | 270000.0 | 1.2 | 139 | 0 | 0 | 271 | 0 |

| 296 | 45.0 | 0 | 2060 | 1 | 60 | 0 | 742000.0 | 0.8 | 138 | 0 | 0 | 278 | 0 |

| 297 | 45.0 | 0 | 2413 | 0 | 38 | 0 | 140000.0 | 1.4 | 140 | 1 | 1 | 280 | 0 |

| 298 | 50.0 | 0 | 196 | 0 | 45 | 0 | 395000.0 | 1.6 | 136 | 1 | 1 | 285 | 0 |

#I am seeing:

# dataset represent T/F as 1/0

# int was wrote as float

# vary big number in some col

#no missing values

hf_df[hf_df.isna().any(axis=1)]

| age | anaemia | creatinine_phosphokinase | diabetes | ejection_fraction | high_blood_pressure | platelets | serum_creatinine | serum_sodium | sex | smoking | time | death_event |

|---|

#around 32% dead (death_event=1)

sum(hf_df["death_event"])/len(hf_df["death_event"])

0.3210702341137124

Since the task is a binary classification problem, I will do Logistic Regression first.#

Logistic Regression without anything#

import numpy as np

import matplotlib.pyplot as plt

y_str="death_event"

y=hf_df[y_str]

X=hf_df[[x for x in list(hf_df.columns) if x!=y_str]]

import pandas as pd

import seaborn as sns

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LogisticRegression

from sklearn.preprocessing import LabelEncoder

from sklearn.metrics import accuracy_score, confusion_matrix

import matplotlib.pyplot as plt

from sklearn.preprocessing import StandardScaler

model_0=LogisticRegression(penalty=None,max_iter=10**10)

model_0.fit(X,y)

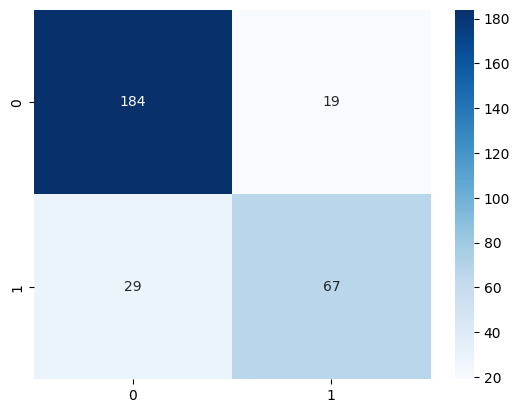

#this is the predict rate without any data spliting

model_0.score(X,y)

0.8394648829431438

def show_conf_mat(model,X,y):

sns.heatmap(

confusion_matrix(y,model.predict(X)),

annot=True,

fmt="d",

cmap="Blues",

xticklabels=model.classes_,

yticklabels=model.classes_,

)

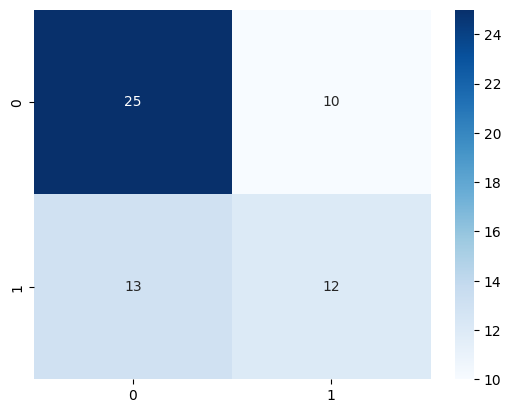

show_conf_mat(model_0,X,y)

Now I will split the data 8:2#

from sklearn.model_selection import train_test_split

SEED=42

#im tried to write X_train and X_test etc.

# my use of _l stand for learn(train), _t stand for test

X_l,X_t,y_l,y_t=train_test_split(X,y,test_size=0.2,random_state=SEED,shuffle=True)

model_1=LogisticRegression(penalty=None,max_iter=10**10)

model_1.fit(X_l,y_l)

#less than 2% acc was lost due to not knowing the full dataset

model_1.score(X_t,y_t),model_0.score(X_t,y_t)-model_1.score(X_t,y_t)

(0.8, -0.01666666666666672)

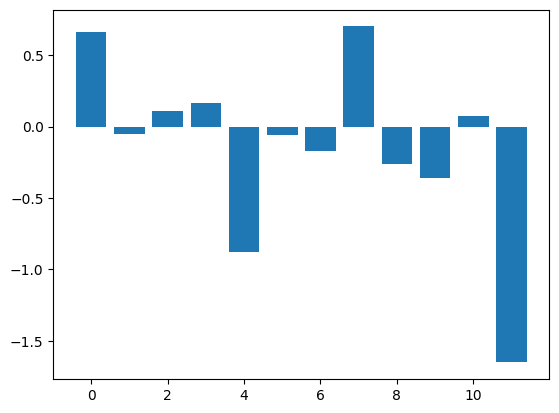

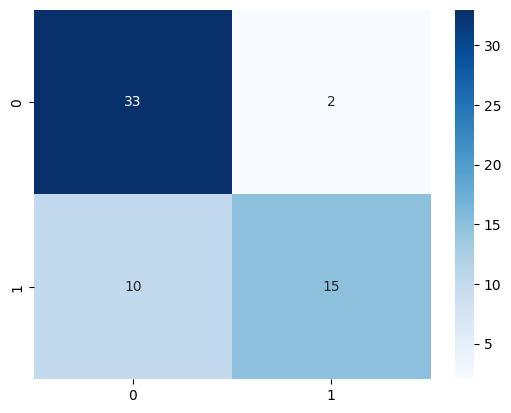

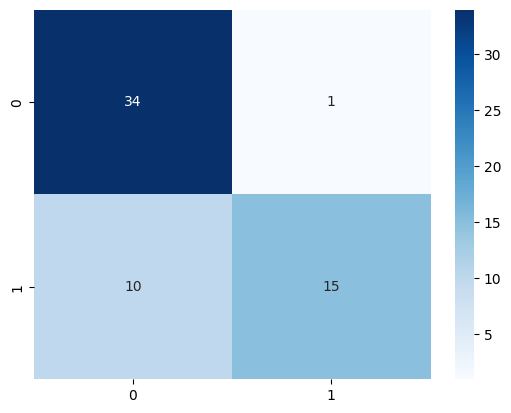

#There are a lot of FN

#which means that the model think a guy is dead but actually the guy survived

show_conf_mat(model_1,X_t,y_t)

Logistic Regression with Cross Validation#

k_vals=(2,11)

ks=[x for x in range(*k_vals)]

ks

[2, 3, 4, 5, 6, 7, 8, 9, 10]

from sklearn.model_selection import cross_val_score,KFold

model_2=LogisticRegression(penalty=None,max_iter=10**10)

best_info=[0]

for k in ks:

kf=KFold(

n_splits=k,

shuffle=True,

random_state=1

)

scores=cross_val_score(

model_2,

X_l,

y_l,

cv=kf,

)

best=max(scores)

if best>best_info[0]:

best_info=[best,k,list(scores).index(best)]

print(f"{k=} | {scores=}")

print(f"{scores.mean()=} | {np.std(scores)=}")

#as fold 10 getting around 92% is likely because of the pure luck

best_info

k=2 | scores=array([0.84166667, 0.78151261])

scores.mean()=0.8115896358543417 | np.std(scores)=0.030077030812324934

k=3 | scores=array([0.8375 , 0.775 , 0.7721519])

scores.mean()=0.7948839662447257 | np.std(scores)=0.030156510297425575

k=4 | scores=array([0.88333333, 0.85 , 0.78333333, 0.77966102])

scores.mean()=0.8240819209039548 | np.std(scores)=0.04420447035105028

k=5 | scores=array([0.875 , 0.83333333, 0.83333333, 0.79166667, 0.80851064])

scores.mean()=0.8283687943262411 | np.std(scores)=0.028160806711743997

k=6 | scores=array([0.875 , 0.825 , 0.825 , 0.775 , 0.825 ,

0.76923077])

scores.mean()=0.8157051282051282 | np.std(scores)=0.03557114760235953

k=7 | scores=array([0.85714286, 0.88235294, 0.79411765, 0.82352941, 0.82352941,

0.76470588, 0.82352941])

scores.mean()=0.8241296518607442 | np.std(scores)=0.03568274657311369

k=8 | scores=array([0.83333333, 0.9 , 0.83333333, 0.86666667, 0.73333333,

0.86666667, 0.73333333, 0.86206897])

scores.mean()=0.8285919540229885 | np.std(scores)=0.05843004129037457

k=9 | scores=array([0.81481481, 0.88888889, 0.81481481, 0.85185185, 0.85185185,

0.80769231, 0.76923077, 0.76923077, 0.84615385])

scores.mean()=0.8238366571699904 | np.std(scores)=0.03754491434144498

k=10 | scores=array([0.83333333, 0.91666667, 0.83333333, 0.875 , 0.83333333,

0.83333333, 0.79166667, 0.75 , 0.79166667, 0.82608696])

scores.mean()=0.8284420289855072 | np.std(scores)=0.043486185871937776

[0.9166666666666666, 10, 1]

Nerual Network With PyTorch#

!nvidia-smi

Wed Jun 12 20:07:40 2024

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 550.78 Driver Version: 550.78 CUDA Version: 12.4 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA GeForce RTX 4060 ... Off | 00000000:01:00.0 Off | N/A |

| N/A 42C P8 3W / 140W | 356MiB / 8188MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| 0 N/A N/A 2668 C python3 200MiB |

| 0 N/A N/A 3826 G /usr/bin/gnome-shell 2MiB |

| 0 N/A N/A 144898 C /home/vs/math10_final/.venv/bin/python 144MiB |

+-----------------------------------------------------------------------------------------+

import torch

from torch import nn

import matplotlib.pyplot as plt

import pandas as pd

torch.__version__

SEED=42

device="cuda" if torch.cuda.is_available() else "cpu"

device

'cuda'

#because there are 13 col, the model have to take 12 inputs

inp_size=len(hf_df.columns)-1

import pandas as pd

import torch

import torch.nn as nn

import torch.optim as optim

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

#normalize the data

scaler=StandardScaler()

X_l=scaler.fit_transform(

X_l

)

X_t=scaler.transform(X_t)

X_l,X_t

(array([[ 1.16420244, 1.13933179, -0.35037003, ..., 0.74293206,

-0.67625223, -1.56416577],

[ 1.16420244, -0.87770745, -0.50593309, ..., 0.74293206,

-0.67625223, 0.37989712],

[-0.03281933, 1.13933179, -0.50064183, ..., 0.74293206,

-0.67625223, 0.4950061 ],

...,

[-0.50609935, -0.87770745, 0.18087256, ..., 0.74293206,

-0.67625223, -0.56655455],

[-1.42476533, -0.87770745, 0.0052027 , ..., 0.74293206,

1.4787382 , 1.42866789],

[ 1.58177789, -0.87770745, 0.33961039, ..., 0.74293206,

1.4787382 , -0.57934444]]),

array([[ 7.46626996e-01, -8.77707451e-01, 5.20270419e-03,

-8.26497787e-01, 1.49345268e-01, -7.36162675e-01,

-2.24743345e+00, 1.32203359e+00, -9.44310219e-02,

7.42932064e-01, 1.47873820e+00, 1.50540721e+00],

[-9.23674793e-01, 1.13933179e+00, -2.95340912e-01,

-8.26497787e-01, -2.80697130e-01, -7.36162675e-01,

1.05843720e+00, -4.90853221e-01, 8.08329548e-01,

7.42932064e-01, 1.47873820e+00, 1.37750834e+00],

[-1.34125024e+00, -8.77707451e-01, 1.97355174e+00,

1.20992460e+00, -7.10739527e-01, -7.36162675e-01,

7.60802546e-01, -2.89421353e-01, 5.82639405e-01,

7.42932064e-01, -6.76252226e-01, -4.21691686e-02],

[ 1.58177789e+00, 1.13933179e+00, -4.80535042e-01,

-8.26497787e-01, -2.80697130e-01, 1.35839541e+00,

1.33481224e+00, 8.07000115e+00, -7.71501449e-01,

7.42932064e-01, 1.47873820e+00, -1.56416577e+00],

[-1.59179551e+00, -8.77707451e-01, -5.02758338e-01,

1.20992460e+00, 1.49345268e-01, -7.36162675e-01,

-2.70288943e-01, -1.88705419e-01, 8.08329548e-01,

7.42932064e-01, -6.76252226e-01, -7.45612977e-01],

[-9.23674793e-01, 1.13933179e+00, 5.01522972e-01,

1.20992460e+00, -7.10739527e-01, -7.36162675e-01,

-3.23437989e-01, -6.92285088e-01, -9.44310219e-02,

-1.34601809e+00, -6.76252226e-01, 1.45424766e+00],

[-8.85238982e-02, 1.13933179e+00, -4.47729225e-01,

-8.26497787e-01, -1.14078192e+00, -7.36162675e-01,

-5.57293791e-01, 3.14874250e-01, -3.20121164e-01,

7.42932064e-01, -6.76252226e-01, -6.43293878e-01],

[-1.34125024e+00, -8.77707451e-01, -3.01690425e-01,

1.20992460e+00, -2.80697130e-01, -7.36162675e-01,

6.24578408e+00, -8.79894853e-02, 1.25970983e+00,

7.42932064e-01, 1.47873820e+00, -5.66554553e-01],

[ 2.41692879e+00, 1.13933179e+00, -5.60962207e-01,

-8.26497787e-01, 1.49345268e-01, 1.35839541e+00,

-6.21072646e-01, 7.17737985e-01, -9.97191591e-01,

7.42932064e-01, 1.47873820e+00, -1.58974555e+00],

[-9.23674793e-01, 1.13933179e+00, -4.89001060e-01,

-8.26497787e-01, -1.57082432e+00, -7.36162675e-01,

-7.80519783e-01, -5.91569154e-01, 5.82639405e-01,

7.42932064e-01, -6.76252226e-01, 1.75258918e-01],

[ 7.46626996e-01, 1.13933179e+00, -5.48263181e-01,

-8.26497787e-01, 1.86951486e+00, -7.36162675e-01,

-7.89523783e-02, -2.89421353e-01, -9.44310219e-02,

-1.34601809e+00, -6.76252226e-01, -6.04924216e-01],

[ 1.33123262e+00, 1.13933179e+00, -1.68350652e-01,

-8.26497787e-01, 5.79387665e-01, -7.36162675e-01,

-4.19106272e-01, 4.15590184e-01, 1.93678026e+00,

7.42932064e-01, -6.76252226e-01, 6.10115090e-01],

[-2.55554077e-01, 1.13933179e+00, -4.57253494e-01,

-8.26497787e-01, -1.14078192e+00, -7.36162675e-01,

-4.61625508e-01, -1.88705419e-01, 1.31259120e-01,

7.42932064e-01, 1.47873820e+00, 4.82216216e-01],

[ 3.29051549e-01, 1.13933179e+00, -2.56185582e-01,

-8.26497787e-01, -2.80697130e-01, 1.35839541e+00,

-2.91548562e-01, -5.91569154e-01, -9.44310219e-02,

-1.34601809e+00, -6.76252226e-01, -1.57278155e-01],

[-8.85238982e-02, 1.13933179e+00, 3.16590085e-02,

-8.26497787e-01, 1.49345268e-01, -7.36162675e-01,

-4.93514936e-01, -7.93001022e-01, 3.56949263e-01,

7.42932064e-01, 1.47873820e+00, -1.00141073e+00],

[-1.34125024e+00, -8.77707451e-01, 7.53995816e+00,

1.20992460e+00, -1.14078192e+00, 1.35839541e+00,

1.35607186e+00, -3.90137287e-01, 5.82639405e-01,

7.42932064e-01, -6.76252226e-01, -9.24671401e-01],

[ 2.45536460e-01, -8.77707451e-01, 1.09308594e+00,

-8.26497787e-01, 1.86951486e+00, -7.36162675e-01,

-2.17139898e-01, -3.90137287e-01, 1.31259120e-01,

7.42932064e-01, -6.76252226e-01, -2.46807368e-01],

[ 1.62021370e-01, -8.77707451e-01, 3.79823973e-01,

-8.26497787e-01, -2.26716912e-02, -7.36162675e-01,

4.41908271e-01, -2.89421353e-01, -7.71501449e-01,

7.42932064e-01, 1.47873820e+00, -5.66554553e-01],

[-1.34125024e+00, -8.77707451e-01, 5.20270419e-03,

-8.26497787e-01, -2.80697130e-01, -7.36162675e-01,

1.30292281e+00, -3.90137287e-01, 1.93678026e+00,

7.42932064e-01, -6.76252226e-01, -9.11881514e-01],

[ 1.58177789e+00, -8.77707451e-01, 2.41192938e-01,

-8.26497787e-01, -2.26716912e-02, -7.36162675e-01,

9.89188559e-03, -2.89421353e-01, -5.45811307e-01,

7.42932064e-01, -6.76252226e-01, -2.97966917e-01],

[-8.85238982e-02, 1.13933179e+00, -3.66243808e-01,

1.20992460e+00, -1.14078192e+00, -7.36162675e-01,

-7.27370738e-01, 3.14874250e-01, 8.08329548e-01,

7.42932064e-01, -6.76252226e-01, -1.57278155e-01],

[-8.85238982e-02, -8.77707451e-01, -5.54612694e-01,

-8.26497787e-01, 1.00943006e+00, 1.35839541e+00,

2.50571706e-01, 9.19169853e-01, 1.48539997e+00,

-1.34601809e+00, -6.76252226e-01, -5.79344441e-01],

[ 2.45536460e-01, -8.77707451e-01, -4.59369999e-01,

-8.26497787e-01, -1.14078192e+00, -7.36162675e-01,

-1.74620661e-01, 1.01988579e+00, -3.20121164e-01,

7.42932064e-01, -6.76252226e-01, 1.04497126e+00],

[-8.40159703e-01, -8.77707451e-01, 8.49687936e-01,

-8.26497787e-01, -1.14078192e+00, 1.35839541e+00,

9.11245684e-02, -4.90853221e-01, -1.44857188e+00,

7.42932064e-01, -6.76252226e-01, -1.20604892e+00],

[ 7.46626996e-01, -8.77707451e-01, -6.43806969e-03,

1.20992460e+00, 5.79387665e-01, 1.35839541e+00,

-8.23039020e-01, -1.88705419e-01, 5.82639405e-01,

7.42932064e-01, 1.47873820e+00, -1.26999836e+00],

[-1.34125024e+00, -8.77707451e-01, 5.20270419e-03,

-8.26497787e-01, -2.26716912e-02, 1.35839541e+00,

1.69622575e+00, -5.91569154e-01, 1.31259120e-01,

-1.34601809e+00, -6.76252226e-01, 1.44145777e+00],

[ 7.46626996e-01, -8.77707451e-01, 2.24128954e+00,

1.20992460e+00, 1.49345268e-01, -7.36162675e-01,

-2.27769707e-01, -3.90137287e-01, 1.31259120e-01,

7.42932064e-01, -6.76252226e-01, 1.46703755e+00],

[-8.85238982e-02, 1.13933179e+00, -5.09107851e-01,

1.20992460e+00, 1.86951486e+00, 1.35839541e+00,

9.11245684e-02, -6.92285088e-01, -9.44310219e-02,

-1.34601809e+00, -6.76252226e-01, -4.89815229e-01],

[-1.09070497e+00, 1.13933179e+00, -4.72069025e-01,

1.20992460e+00, -7.10739527e-01, 1.35839541e+00,

-1.95880279e-01, 2.14158316e-01, -1.44857188e+00,

-1.34601809e+00, -6.76252226e-01, 7.76383627e-01],

[ 1.16420244e+00, -8.77707451e-01, -4.84768051e-01,

-8.26497787e-01, 1.00943006e+00, 1.35839541e+00,

-1.53361043e-01, -2.89421353e-01, 2.61385069e+00,

7.42932064e-01, -6.76252226e-01, 9.81021826e-01],

[-1.34125024e+00, -8.77707451e-01, 5.20270419e-03,

-8.26497787e-01, -2.08687520e+00, -7.36162675e-01,

-1.02500539e+00, -5.91569154e-01, -2.12564230e+00,

7.42932064e-01, -6.76252226e-01, -1.51300622e+00],

[-5.06099345e-01, 1.13933179e+00, -4.20214668e-01,

-8.26497787e-01, 5.79387665e-01, -7.36162675e-01,

9.89188559e-03, -2.08848606e-01, 1.31259120e-01,

7.42932064e-01, 1.47873820e+00, 1.00660160e+00],

[-9.23674793e-01, 1.13933179e+00, -4.42437964e-01,

1.20992460e+00, -7.10739527e-01, -7.36162675e-01,

4.20648653e-01, -1.88705419e-01, 3.56949263e-01,

-1.34601809e+00, -6.76252226e-01, -1.32115791e+00],

[ 1.16420244e+00, -8.77707451e-01, 5.20270419e-03,

1.20992460e+00, -7.10739527e-01, 1.35839541e+00,

9.89188559e-03, 4.45804964e-01, -5.45811307e-01,

-1.34601809e+00, -6.76252226e-01, -1.39789724e+00],

[-9.23674793e-01, -8.77707451e-01, 5.20270419e-03,

1.20992460e+00, -2.26716912e-02, -7.36162675e-01,

5.05687126e-01, 5.16306118e-01, -3.20121164e-01,

7.42932064e-01, 1.47873820e+00, -1.24441859e+00],

[-8.85238982e-02, 1.13933179e+00, -2.77350625e-01,

1.20992460e+00, 1.86951486e+00, -7.36162675e-01,

2.03637965e+00, -2.89421353e-01, -1.22288173e+00,

7.42932064e-01, 1.47873820e+00, -1.56416577e+00],

[ 7.46626996e-01, 1.13933179e+00, -4.59369999e-01,

-8.26497787e-01, 1.86951486e+00, -7.36162675e-01,

9.41509302e-01, -8.79894853e-02, 1.31259120e-01,

-1.34601809e+00, -6.76252226e-01, -5.40974778e-01],

[-1.34125024e+00, -8.77707451e-01, 5.20270419e-03,

-8.26497787e-01, -1.57082432e+00, 1.35839541e+00,

-1.45019776e+00, 2.14158316e-01, -3.20121164e-01,

7.42932064e-01, -6.76252226e-01, 6.10115090e-01],

[-5.06099345e-01, -8.77707451e-01, -5.47204929e-01,

-8.26497787e-01, -2.80697130e-01, -7.36162675e-01,

-3.65957226e-01, -1.88705419e-01, -3.20121164e-01,

7.42932064e-01, 1.47873820e+00, -5.40974778e-01],

[-1.59179551e+00, -8.77707451e-01, 5.20270419e-03,

-8.26497787e-01, 1.86951486e+00, -7.36162675e-01,

9.89188559e-03, -2.08848606e-01, 1.31259120e-01,

-1.34601809e+00, -6.76252226e-01, -6.43293878e-01],

[-8.85238982e-02, 1.13933179e+00, -5.60962207e-01,

-8.26497787e-01, -1.57082432e+00, -7.36162675e-01,

-6.21072646e-01, -6.92285088e-01, 5.82639405e-01,

7.42932064e-01, 1.47873820e+00, -7.58402865e-01],

[-8.85238982e-02, 1.13933179e+00, -5.30272894e-01,

1.20992460e+00, -1.14078192e+00, -7.36162675e-01,

-7.06111119e-01, 1.12060172e+00, -9.97191591e-01,

-1.34601809e+00, -6.76252226e-01, -7.07243315e-01],

[ 3.29051549e-01, 1.13933179e+00, -4.91117564e-01,

1.20992460e+00, 1.86951486e+00, 1.35839541e+00,

-6.31702455e-01, -4.90853221e-01, 8.08329548e-01,

-1.34601809e+00, -6.76252226e-01, -4.89815229e-01],

[ 7.46626996e-01, -8.77707451e-01, -5.13340859e-01,

-8.26497787e-01, 1.86951486e+00, 1.35839541e+00,

5.80095790e-01, -5.91569154e-01, 8.08329548e-01,

-1.34601809e+00, 1.47873820e+00, -7.45612977e-01],

[-6.73129524e-01, 1.13933179e+00, -3.24971973e-01,

1.20992460e+00, -2.80697130e-01, -7.36162675e-01,

-3.76587035e-01, 2.02704512e+00, 1.93678026e+00,

7.42932064e-01, -6.76252226e-01, -3.49126467e-01],

[ 3.29051549e-01, -8.77707451e-01, -4.33971947e-01,

-8.26497787e-01, -7.10739527e-01, -7.36162675e-01,

-3.64331417e-02, -5.91569154e-01, 3.56949263e-01,

-1.34601809e+00, -6.76252226e-01, 6.86854415e-01],

[ 7.46626996e-01, -8.77707451e-01, -3.86350599e-01,

1.20992460e+00, -1.82884976e+00, 1.35839541e+00,

1.34544205e+00, -3.90137287e-01, -9.44310219e-02,

7.42932064e-01, 1.47873820e+00, 7.12434190e-01],

[ 3.29051549e-01, -8.77707451e-01, 3.33260877e-01,

1.20992460e+00, -2.80697130e-01, -7.36162675e-01,

9.89188559e-03, -2.89421353e-01, 1.25970983e+00,

-1.34601809e+00, -6.76252226e-01, 1.58214654e+00],

[-5.06099345e-01, -8.77707451e-01, 5.20270419e-03,

1.20992460e+00, -2.80697130e-01, 1.35839541e+00,

1.15410549e+00, -6.92285088e-01, 8.08329548e-01,

-1.34601809e+00, -6.76252226e-01, 8.27543177e-01],

[ 1.62021370e-01, 1.13933179e+00, -4.81593294e-01,

1.20992460e+00, 1.86951486e+00, -7.36162675e-01,

4.86053317e-02, -1.88705419e-01, 1.93678026e+00,

7.42932064e-01, -6.76252226e-01, 1.88048805e-01],

[ 9.13657175e-01, 1.13933179e+00, 3.87231738e-01,

-8.26497787e-01, -1.14078192e+00, 1.35839541e+00,

8.03321783e-01, 3.14874250e-01, 5.82639405e-01,

7.42932064e-01, 1.47873820e+00, -2.72387142e-01],

[-9.23674793e-01, -8.77707451e-01, 5.20270419e-03,

-8.26497787e-01, 1.00943006e+00, -7.36162675e-01,

-1.16319291e+00, -7.93001022e-01, -5.45811307e-01,

-1.34601809e+00, -6.76252226e-01, 5.07795991e-01],

[-1.72038988e-01, 1.13933179e+00, -3.14389451e-01,

1.20992460e+00, -1.14078192e+00, 1.35839541e+00,

4.20648653e-01, -3.90137287e-01, 1.03401969e+00,

-1.34601809e+00, -6.76252226e-01, -6.94453428e-01],

[-1.75882569e+00, -8.77707451e-01, 4.96492954e-02,

-8.26497787e-01, -2.80697130e-01, -7.36162675e-01,

4.10018843e-01, -3.90137287e-01, 1.25970983e+00,

7.42932064e-01, 1.47873820e+00, 1.04497126e+00],

[ 7.46626996e-01, -8.77707451e-01, 5.20270419e-03,

1.20992460e+00, -2.26716912e-02, -7.36162675e-01,

-2.52274551e+00, -2.89421353e-01, 8.08329548e-01,

7.42932064e-01, -6.76252226e-01, 1.45424766e+00],

[ 3.29051549e-01, -8.77707451e-01, -3.73651573e-01,

1.20992460e+00, 1.00943006e+00, -7.36162675e-01,

-1.20571215e+00, -8.79894853e-02, 1.31259120e-01,

7.42932064e-01, 1.47873820e+00, -7.71192752e-01],

[-5.89614435e-01, -8.77707451e-01, 5.20270419e-03,

1.20992460e+00, -2.26716912e-02, -7.36162675e-01,

1.67159042e-02, 4.15590184e-01, -5.45811307e-01,

7.42932064e-01, -6.76252226e-01, 1.03218138e+00],

[ 1.58177789e+00, -8.77707451e-01, -4.54078738e-01,

1.20992460e+00, -2.26716912e-02, -7.36162675e-01,

-1.20571215e+00, 5.16306118e-01, 1.71109012e+00,

7.42932064e-01, 1.47873820e+00, -1.39789724e+00],

[-1.00718988e+00, -8.77707451e-01, 4.17921051e-01,

1.20992460e+00, -2.80697130e-01, 1.35839541e+00,

5.92351409e-02, -5.91569154e-01, -1.44857188e+00,

-1.34601809e+00, -6.76252226e-01, 6.99644302e-01],

[ 9.13657175e-01, -8.77707451e-01, -2.25496269e-01,

1.20992460e+00, -1.57082432e+00, 1.35839541e+00,

-8.95821875e-02, -8.79894853e-02, -9.44310219e-02,

7.42932064e-01, 1.47873820e+00, -9.37461289e-01]]))

X_l=torch.tensor(X_l,dtype=torch.float32).to(device)

y_l=torch.tensor(y_l,dtype=torch.float32).to(device).unsqueeze(1)

X_t = torch.tensor(X_t, dtype=torch.float32).unsqueeze(1).to(device)

y_t = torch.tensor(y_t.to_numpy(), dtype=torch.float32).unsqueeze(1).to(device)

class HeartFailureNN(nn.Module):

def __init__(self):

super(HeartFailureNN, self).__init__()

self.layer1 = nn.Linear(inp_size, 32)

self.layer2 = nn.Linear(32, 16)

self.output = nn.Linear(16, 1)

self.relu = nn.ReLU()

self.sigmoid = nn.Sigmoid()

def forward(self, x):

x = self.relu(self.layer1(x))

x = self.relu(self.layer2(x))

x = self.sigmoid(self.output(x))

return x

model_3=HeartFailureNN().to(device)

model_3.state_dict()

OrderedDict([('layer1.weight',

tensor([[ 2.2922e-01, 1.7116e-01, -9.3077e-02, -9.1616e-02, 2.2275e-01,

-2.3007e-01, 3.1890e-02, 1.7748e-01, 1.8381e-01, -1.3053e-01,

-1.4603e-02, 4.1606e-02],

[-2.8085e-01, -1.0538e-01, 1.8502e-01, -1.7459e-02, 1.9211e-01,

-4.4997e-02, -1.6611e-01, -2.4092e-01, 2.4254e-01, -1.3782e-01,

-7.3709e-02, 4.9440e-02],

[ 2.0098e-02, -6.7884e-02, 1.2450e-02, -2.4829e-01, 1.5804e-01,

2.2427e-01, -7.8982e-02, -2.0919e-01, 2.7983e-01, -3.3181e-02,

8.7102e-02, 1.2963e-01],

[ 2.5613e-01, -4.7729e-02, -8.6461e-02, 2.5679e-01, 2.8597e-01,

1.5136e-01, 2.8460e-01, 1.5277e-01, -5.8291e-02, 2.1879e-01,

2.4158e-02, 2.2968e-01],

[ 4.1753e-02, 1.7969e-01, 4.8242e-02, 4.6568e-02, -2.0279e-01,

-2.4347e-01, -2.4704e-02, -2.6502e-01, -1.4418e-01, 1.7965e-01,

-2.3586e-01, 8.1565e-02],

[-2.7891e-01, 1.7206e-01, -2.5785e-02, -2.4873e-01, 2.8244e-01,

-2.4949e-01, 1.6958e-01, -2.7877e-01, 9.8072e-02, 1.0169e-01,

-6.7338e-02, 1.1296e-01],

[ 1.2056e-02, 1.3713e-01, 1.2473e-01, -1.8328e-01, 1.5901e-02,

4.2842e-02, 1.8066e-01, 1.4241e-01, -1.3926e-02, -2.0259e-01,

-2.0861e-01, 2.9812e-02],

[-1.8504e-01, -4.4055e-02, 2.0949e-01, 2.7394e-02, -2.6404e-01,

-2.2627e-01, 1.3557e-01, -2.3805e-01, 1.6995e-01, -1.9433e-01,

-2.6464e-01, -2.2572e-02],

[ 1.1152e-01, -1.9490e-01, 1.2100e-01, 3.3853e-02, 5.8822e-02,

1.3180e-01, 3.8797e-02, 1.8920e-01, 2.5211e-01, -1.4696e-01,

1.4074e-01, 1.4404e-01],

[ 2.2004e-01, 1.5028e-01, -8.7056e-02, 9.4089e-02, -2.7881e-01,

2.6406e-01, 7.4161e-03, -1.0224e-01, -2.5340e-01, -1.9560e-01,

-2.0781e-01, -2.2178e-01],

[ 1.3610e-01, -6.1982e-02, 1.2046e-01, -1.0912e-01, 1.8858e-01,

1.7452e-01, 1.3999e-01, 6.1097e-02, 5.6583e-03, 1.4405e-01,

-2.4902e-02, -7.5582e-02],

[ 2.6280e-01, -7.6948e-02, -2.2195e-01, -2.3445e-01, -2.0777e-01,

-1.2450e-02, 2.2259e-01, -7.5717e-02, 1.0698e-01, 5.6350e-03,

1.9516e-01, 1.4356e-01],

[ 1.5255e-01, -1.1269e-01, 2.2399e-01, 2.2950e-01, -1.8591e-02,

-1.4937e-01, 2.4557e-01, -4.1247e-02, -1.7470e-02, 1.9159e-01,

-2.8817e-01, -2.0290e-01],

[-1.0617e-01, -4.1614e-02, -1.1760e-01, -3.2803e-02, -2.7631e-01,

2.3587e-01, -2.5664e-01, 1.7575e-01, -2.1476e-01, 1.2556e-01,

-2.0557e-01, 2.6747e-01],

[-4.7474e-02, -1.6228e-01, 1.4995e-01, -2.5735e-01, -2.5603e-02,

1.3204e-01, -5.8533e-02, 2.8483e-01, 2.1777e-02, 1.7812e-01,

-1.3998e-01, 1.6854e-02],

[ 1.4044e-01, 2.7219e-02, -2.6933e-01, 1.1827e-01, -2.0488e-01,

-1.6318e-01, 2.7501e-01, 6.2147e-03, 3.5243e-02, -1.3790e-01,

2.5126e-01, -4.6583e-02],

[ 5.1457e-02, 2.2490e-01, -1.6779e-01, -7.2829e-02, 2.7296e-01,

2.8333e-01, -7.0932e-02, -1.1297e-01, -1.0611e-01, -8.2073e-02,

-1.8777e-01, -2.3319e-01],

[ 1.4356e-01, 1.1685e-01, -1.4809e-01, 4.6295e-02, -2.8786e-01,

-2.8156e-01, 2.6534e-01, 1.5115e-01, 1.0951e-01, -5.4767e-02,

-1.5352e-03, 8.4666e-02],

[ 9.1542e-02, 1.5486e-06, -1.5656e-01, -4.6717e-02, 1.7426e-01,

1.2075e-01, -1.6180e-01, -2.6076e-01, 1.5258e-01, -1.1592e-01,

-1.3743e-02, 8.6743e-02],

[ 2.2532e-01, -1.6604e-01, -2.5131e-01, 1.3629e-01, 2.5422e-02,

-1.7199e-01, 8.9597e-02, 1.3128e-01, -1.6143e-01, 2.6216e-01,

-3.7319e-02, 1.9186e-01],

[ 1.1678e-01, 2.4223e-01, -1.4849e-01, 1.7106e-01, -3.5642e-02,

-2.6884e-01, -1.9576e-01, 7.6277e-02, -2.8300e-02, 2.0703e-01,

-1.8745e-01, -2.4719e-02],

[-1.3567e-01, 5.6176e-03, -2.2379e-01, -1.7211e-01, 4.6289e-02,

1.1340e-01, -9.4377e-03, 2.6884e-01, -7.7984e-02, 1.4181e-01,

1.6457e-01, 1.5838e-01],

[ 1.5180e-01, -2.3071e-01, -2.1298e-01, -1.1716e-01, 1.8801e-01,

-4.1196e-02, 8.4357e-02, 2.7259e-01, -2.7399e-01, 1.7135e-01,

1.9712e-01, -7.8625e-02],

[-1.4490e-01, 1.0419e-01, 1.2527e-01, 6.3795e-02, 1.9840e-01,

-2.9285e-03, -2.0880e-02, -2.7633e-01, -4.5022e-03, 2.6675e-01,

7.6740e-02, -7.6956e-02],

[-2.0576e-02, 1.4079e-01, -2.2443e-01, -2.5425e-01, -2.1205e-01,

-2.3505e-01, 5.1943e-02, 1.0504e-01, 1.0069e-01, 2.5194e-01,

2.2699e-01, 1.8161e-01],

[ 2.6235e-01, 3.1063e-02, -4.2365e-02, 6.6400e-02, 6.8451e-02,

1.4366e-01, 2.4748e-01, -1.4717e-01, -2.3817e-01, -1.4089e-01,

1.8282e-01, -1.5904e-01],

[ 1.8596e-01, -2.1978e-01, 3.8548e-02, -1.4320e-01, -1.7156e-02,

1.2213e-01, -1.1416e-01, 2.0357e-01, -6.3323e-03, -2.2644e-01,

1.5494e-01, -2.5748e-01],

[-2.4320e-01, 2.2262e-01, 1.5010e-01, -2.3336e-01, 5.1623e-02,

-2.4053e-01, -1.1564e-01, 1.3193e-01, 1.0731e-01, -9.0276e-02,

2.9072e-02, 1.6025e-01],

[ 2.7049e-01, 5.2668e-02, 2.7311e-01, 9.5785e-02, -7.9667e-02,

1.1482e-01, -1.2378e-01, 4.8536e-02, -1.0620e-02, -9.0178e-02,

1.5000e-02, 1.7173e-01],

[-1.3140e-01, -2.5672e-02, -2.6276e-01, -1.5423e-01, -1.8655e-01,

1.3370e-01, 1.6982e-01, -4.5856e-02, 2.1548e-01, 1.8413e-01,

-1.5558e-01, 1.3998e-01],

[ 1.7256e-01, -2.0840e-01, 1.3721e-02, -9.6935e-02, 1.0276e-01,

-4.1685e-02, 3.5669e-02, 1.8701e-02, -2.7238e-01, 2.5471e-01,

-7.5701e-02, 7.7424e-02],

[ 6.9836e-02, -2.6389e-02, -7.1167e-02, 2.4586e-01, 9.9125e-03,

-2.9470e-02, -9.3469e-03, -1.0000e-01, -2.5670e-01, 2.0543e-01,

-2.5952e-01, 1.3137e-01]], device='cuda:0')),

('layer1.bias',

tensor([-0.0886, 0.0411, 0.0627, 0.1634, -0.1887, -0.2666, 0.1526, 0.1626,

0.2846, -0.0813, -0.2582, -0.1629, 0.0146, -0.1577, -0.1936, 0.1467,

-0.2726, -0.0552, 0.0364, 0.2366, -0.0942, 0.0444, 0.0127, 0.0200,

0.2252, 0.1151, 0.1152, 0.1540, 0.1302, -0.2413, 0.1168, 0.1385],

device='cuda:0')),

('layer2.weight',

tensor([[-0.1200, -0.1599, -0.0756, -0.0413, -0.0395, 0.1582, 0.1190, -0.0294,

-0.0567, 0.1274, -0.0705, -0.0731, -0.1281, 0.0982, -0.0276, -0.0380,

0.0866, 0.1285, -0.0095, 0.0941, -0.0772, 0.0654, -0.0094, 0.0631,

-0.0913, 0.0569, 0.1150, -0.0016, -0.1309, -0.0459, -0.1622, 0.1050],

[ 0.1653, 0.0329, 0.0938, 0.0355, 0.1335, 0.0054, 0.1169, -0.0805,

0.0027, 0.0356, 0.1192, -0.0156, -0.1356, 0.0262, 0.0374, -0.0864,

-0.0993, 0.0979, 0.1555, 0.1299, 0.1344, -0.0099, -0.1681, 0.1642,

-0.1376, -0.1281, 0.1436, -0.0401, 0.0227, -0.0009, -0.0044, -0.1481],

[-0.0668, -0.1125, 0.0166, -0.1002, -0.0752, 0.1394, 0.0593, 0.0251,

0.0758, 0.1550, 0.0041, -0.1480, -0.1275, 0.0522, -0.0483, 0.0484,

0.1500, -0.1423, -0.1336, 0.0881, 0.1662, 0.0474, 0.1068, 0.1420,

0.1177, 0.0158, 0.0319, 0.0524, 0.0569, 0.0741, -0.0486, 0.0786],

[ 0.0252, 0.1572, -0.0705, -0.0931, 0.1671, -0.0457, -0.0854, -0.1197,

-0.1355, 0.0841, -0.0034, 0.0502, 0.0421, -0.1732, 0.0733, 0.1073,

0.0112, -0.1413, -0.1222, -0.0768, 0.0511, 0.0621, -0.1556, 0.1066,

0.1100, 0.0768, 0.1635, 0.0179, -0.0088, -0.0205, 0.0612, 0.0782],

[ 0.0715, -0.0172, -0.0122, 0.0707, 0.0390, -0.1061, -0.1661, 0.0072,

0.1611, -0.1321, -0.1448, -0.0905, 0.1153, -0.0320, -0.1605, 0.1312,

0.1116, 0.0865, -0.0338, 0.0499, -0.0574, 0.1269, -0.0373, 0.0309,

-0.1501, 0.0818, -0.0887, 0.0963, -0.0882, 0.0935, 0.1609, -0.1361],

[ 0.1139, -0.1413, -0.0169, 0.0744, -0.0119, -0.1395, 0.0333, 0.1270,

0.0699, -0.0093, 0.0098, -0.1335, -0.1258, 0.0939, -0.0403, -0.0241,

-0.0402, -0.0788, 0.0787, -0.0099, -0.1139, -0.0522, 0.1471, -0.0463,

-0.0187, 0.1732, 0.0557, 0.1283, 0.0961, -0.1151, -0.1534, 0.1647],

[ 0.0736, -0.0811, 0.0689, 0.1484, 0.1643, -0.1641, -0.1074, 0.0913,

-0.1672, 0.0883, 0.1398, -0.1739, -0.1733, -0.0080, 0.0580, 0.0641,

0.1523, 0.0849, -0.0426, 0.0251, -0.0755, 0.1624, -0.1509, 0.0228,

0.0767, -0.0349, 0.1679, 0.0514, 0.0210, -0.0050, 0.0468, -0.0994],

[-0.1709, 0.0605, -0.0884, -0.1126, 0.0710, -0.0542, -0.1277, -0.1406,

0.0619, 0.1300, 0.0114, -0.0858, -0.1760, 0.1100, 0.1620, -0.0190,

0.1079, -0.0210, 0.0166, 0.0517, 0.0362, 0.0122, 0.1283, 0.0569,

-0.0151, -0.1619, -0.1623, 0.1598, 0.0877, -0.0579, -0.1021, 0.1557],

[ 0.1305, 0.0847, 0.0191, -0.0901, -0.1484, 0.0859, -0.0319, 0.0995,

0.1637, 0.1125, 0.1144, 0.0235, -0.1365, -0.0243, 0.0778, 0.0221,

-0.1302, 0.0673, 0.0692, 0.1442, 0.1448, -0.0758, -0.1609, 0.1482,

0.0878, 0.0717, -0.0996, -0.0546, 0.1631, -0.0003, 0.1518, 0.0770],

[ 0.1576, -0.1459, 0.1297, 0.0037, 0.0940, -0.1616, -0.1626, -0.0021,

-0.1095, -0.1695, -0.0439, -0.0440, 0.1559, -0.0678, 0.0791, -0.0803,

0.0078, -0.0874, 0.0428, 0.0132, 0.0751, 0.1143, 0.1100, 0.0071,

-0.0436, 0.1019, -0.1277, -0.0024, 0.0686, -0.1135, 0.1214, -0.0527],

[ 0.0233, -0.0951, -0.0870, -0.0718, 0.0893, 0.0462, 0.1386, 0.0880,

0.1489, -0.0890, -0.1475, 0.0149, 0.1704, 0.0517, -0.1670, 0.1112,

0.1720, -0.0661, 0.0366, 0.0257, 0.1721, -0.1119, -0.0336, 0.0573,

0.1013, 0.0042, -0.0609, -0.0770, 0.1296, 0.1278, 0.0783, 0.0041],

[ 0.0905, 0.0185, -0.1118, 0.1594, -0.0095, -0.1602, -0.0506, -0.1600,

-0.1473, -0.1479, 0.1435, -0.0713, -0.0330, 0.0823, -0.1583, 0.1335,

0.0530, 0.1464, -0.0603, -0.0237, -0.0716, -0.0875, 0.0881, -0.0555,

-0.0630, 0.1403, 0.1479, -0.0418, -0.0494, 0.0203, -0.0287, 0.0595],

[ 0.0133, 0.0639, -0.1751, -0.0269, -0.0603, 0.0576, -0.1172, 0.0585,

-0.0008, 0.0350, 0.0535, 0.0436, 0.0142, 0.0992, 0.1667, 0.0452,

-0.1476, 0.0541, -0.1494, 0.0383, 0.1417, 0.1130, -0.1272, 0.1477,

0.1555, 0.1440, 0.0990, -0.0458, -0.0281, 0.1287, 0.0152, 0.1150],

[ 0.0477, 0.0229, 0.1208, 0.1343, 0.1342, 0.0723, 0.1572, -0.1351,

0.1629, 0.1058, -0.1342, -0.0915, -0.0103, -0.1656, 0.0845, 0.0597,

0.0976, 0.0563, 0.0244, -0.1183, 0.1239, 0.1361, -0.1361, 0.1625,

0.0588, 0.0150, 0.1604, 0.0179, -0.1611, -0.0998, -0.0942, -0.1552],

[ 0.1484, 0.1718, -0.0962, -0.0719, -0.1379, -0.0358, 0.1151, -0.1218,

-0.0922, -0.1632, -0.0489, 0.1725, 0.1094, -0.0046, 0.0734, 0.1527,

0.0993, 0.1538, -0.0794, -0.1512, -0.0762, -0.1732, -0.0082, 0.1076,

0.1149, 0.0343, 0.0058, 0.0125, 0.0320, -0.1636, 0.0797, -0.1142],

[ 0.0346, -0.0631, -0.0095, -0.1010, -0.0787, -0.0316, -0.1295, -0.0914,

0.1307, 0.0124, -0.0303, -0.0649, 0.0121, 0.0707, -0.0673, -0.1432,

-0.0561, -0.1000, 0.1616, 0.0075, 0.0032, 0.0116, -0.0566, -0.1495,

-0.1125, -0.0638, -0.1213, 0.0666, 0.0953, -0.1432, -0.0619, -0.0544]],

device='cuda:0')),

('layer2.bias',

tensor([-0.0021, -0.1047, -0.0269, -0.0528, 0.0936, 0.0146, 0.0897, -0.1447,

-0.1727, -0.1554, -0.0019, -0.0623, -0.1504, 0.0433, -0.0143, 0.0358],

device='cuda:0')),

('output.weight',

tensor([[-0.1879, -0.0180, 0.2389, 0.0558, 0.2346, 0.2328, 0.0621, 0.0352,

0.1764, 0.0967, -0.2126, -0.1112, 0.2139, -0.0339, 0.1071, -0.1764]],

device='cuda:0')),

('output.bias', tensor([0.1674], device='cuda:0'))])

with torch.inference_mode():

untrained_y_pred=model_3(X_t)

untrained_y_pred

tensor([[[0.6110]],

[[0.5938]],

[[0.5459]],

[[0.6435]],

[[0.5528]],

[[0.5590]],

[[0.5610]],

[[0.5899]],

[[0.5955]],

[[0.5637]],

[[0.5577]],

[[0.5635]],

[[0.5780]],

[[0.5533]],

[[0.5728]],

[[0.5615]],

[[0.5605]],

[[0.5734]],

[[0.5577]],

[[0.5487]],

[[0.5576]],

[[0.5514]],

[[0.5855]],

[[0.5635]],

[[0.5745]],

[[0.5457]],

[[0.5580]],

[[0.5566]],

[[0.5659]],

[[0.5654]],

[[0.5638]],

[[0.5829]],

[[0.5533]],

[[0.5739]],

[[0.5661]],

[[0.5917]],

[[0.5614]],

[[0.5822]],

[[0.5721]],

[[0.5491]],

[[0.5701]],

[[0.5642]],

[[0.5611]],

[[0.5734]],

[[0.5728]],

[[0.5537]],

[[0.5878]],

[[0.5758]],

[[0.5642]],

[[0.5578]],

[[0.5675]],

[[0.5485]],

[[0.5609]],

[[0.5872]],

[[0.5628]],

[[0.5678]],

[[0.5670]],

[[0.5647]],

[[0.5765]],

[[0.5780]]], device='cuda:0')

def show_conf_mat(model,X,y):

sns.heatmap(

confusion_matrix(y.to("cpu").squeeze().detach().numpy(),torch.round(torch.sigmoid(model(X))).to("cpu").squeeze().detach().numpy()),

annot=True,

fmt="d",

cmap="Blues",

)

show_conf_mat(model_3,X_t,y_t)

torch.round(untrained_y_pred)

tensor([[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]],

[[1.]]], device='cuda:0')

y_t

tensor([[0.],

[0.],

[1.],

[1.],

[0.],

[0.],

[1.],

[0.],

[1.],

[0.],

[0.],

[1.],

[1.],

[0.],

[0.],

[1.],

[0.],

[0.],

[1.],

[1.],

[0.],

[0.],

[0.],

[1.],

[1.],

[0.],

[0.],

[0.],

[1.],

[0.],

[1.],

[0.],

[0.],

[1.],

[1.],

[1.],

[1.],

[1.],

[0.],

[0.],

[1.],

[1.],

[0.],

[0.],

[0.],

[0.],

[0.],

[0.],

[0.],

[0.],

[1.],

[1.],

[1.],

[0.],

[0.],

[0.],

[0.],

[1.],

[0.],

[1.]], device='cuda:0')

#had sigmoid ready

loss_fn=nn.BCELoss()

#for binary optim

opt=optim.Adam(model_3.parameters(),lr=0.001)

# Training loop

num_epochs = 50000

for epoch in range(num_epochs):

model_3.train()

opt.zero_grad()

outputs = model_3(X_l)

#print(y_l.shape,outputs.shape)

loss = loss_fn(outputs, y_l)

loss.backward()

opt.step()

if (epoch+1) % 200 == 0:

model_3.eval()

with torch.inference_mode():

y_pred=model_3(X_t).squeeze(1)

t_loss=loss_fn(y_pred,y_t)

t_acc=(torch.round(torch.sigmoid(y_pred))==y_t).sum()/len(y_t)

print(f'Epoch [{epoch+1}/{num_epochs}]| Loss: {loss.item()} | Test loss: {t_loss} | Test acc: {t_acc}')

Epoch [200/50000]| Loss: 0.20615744590759277 | Test loss: 0.6159399747848511 | Test acc: 0.4166666865348816

Epoch [400/50000]| Loss: 0.03982704505324364 | Test loss: 1.3452800512313843 | Test acc: 0.4166666865348816

Epoch [600/50000]| Loss: 0.009808346629142761 | Test loss: 1.9476165771484375 | Test acc: 0.4166666865348816

Epoch [800/50000]| Loss: 0.003914010711014271 | Test loss: 2.308140754699707 | Test acc: 0.4166666865348816

Epoch [1000/50000]| Loss: 0.0019957765471190214 | Test loss: 2.579430341720581 | Test acc: 0.4333333671092987

Epoch [1200/50000]| Loss: 0.0012006012257188559 | Test loss: 4.156387805938721 | Test acc: 0.46666669845581055

Epoch [1400/50000]| Loss: 0.0007950253202579916 | Test loss: 4.303135395050049 | Test acc: 0.5

Epoch [1600/50000]| Loss: 0.0005603853496722877 | Test loss: 4.433218955993652 | Test acc: 0.5

Epoch [1800/50000]| Loss: 0.000412351539125666 | Test loss: 4.549786567687988 | Test acc: 0.5333333611488342

Epoch [2000/50000]| Loss: 0.00031356673571281135 | Test loss: 4.654916763305664 | Test acc: 0.550000011920929

Epoch [2200/50000]| Loss: 0.0002446570142637938 | Test loss: 4.75088357925415 | Test acc: 0.550000011920929

Epoch [2400/50000]| Loss: 0.00019458652241155505 | Test loss: 4.8414716720581055 | Test acc: 0.550000011920929

Epoch [2600/50000]| Loss: 0.00015712043386884034 | Test loss: 4.916953086853027 | Test acc: 0.5333333611488342

Epoch [2800/50000]| Loss: 0.00012732668255921453 | Test loss: 6.390190124511719 | Test acc: 0.5333333611488342

Epoch [3000/50000]| Loss: 0.00010508887498872355 | Test loss: 6.458841323852539 | Test acc: 0.5166667103767395

Epoch [3200/50000]| Loss: 8.776828326517716e-05 | Test loss: 6.525074481964111 | Test acc: 0.5166667103767395

Epoch [3400/50000]| Loss: 7.385967182926834e-05 | Test loss: 6.588977336883545 | Test acc: 0.5166667103767395

Epoch [3600/50000]| Loss: 6.250566366361454e-05 | Test loss: 6.650025844573975 | Test acc: 0.5333333611488342

Epoch [3800/50000]| Loss: 5.3246665629558265e-05 | Test loss: 6.7106032371521 | Test acc: 0.550000011920929

Epoch [4000/50000]| Loss: 4.558221553452313e-05 | Test loss: 6.76936674118042 | Test acc: 0.550000011920929

Epoch [4200/50000]| Loss: 3.918914808309637e-05 | Test loss: 6.827228546142578 | Test acc: 0.5666667222976685

Epoch [4400/50000]| Loss: 3.38368809025269e-05 | Test loss: 6.884305000305176 | Test acc: 0.5666667222976685

Epoch [4600/50000]| Loss: 2.931252311100252e-05 | Test loss: 6.940467834472656 | Test acc: 0.5666667222976685

Epoch [4800/50000]| Loss: 2.5463274141657166e-05 | Test loss: 6.996277332305908 | Test acc: 0.5666667222976685

Epoch [5000/50000]| Loss: 2.218194458691869e-05 | Test loss: 7.051356315612793 | Test acc: 0.5666667222976685

Epoch [5200/50000]| Loss: 1.9369299479876645e-05 | Test loss: 7.105686664581299 | Test acc: 0.5666667222976685

Epoch [5400/50000]| Loss: 1.6950303688645363e-05 | Test loss: 7.158790588378906 | Test acc: 0.5666667222976685

Epoch [5600/50000]| Loss: 1.4863081560179126e-05 | Test loss: 7.211725234985352 | Test acc: 0.5833333730697632

Epoch [5800/50000]| Loss: 1.3052418580628e-05 | Test loss: 7.263161659240723 | Test acc: 0.5833333730697632

Epoch [6000/50000]| Loss: 1.1482287845865358e-05 | Test loss: 7.314208507537842 | Test acc: 0.5833333730697632

Epoch [6200/50000]| Loss: 1.0115331861015875e-05 | Test loss: 7.365146160125732 | Test acc: 0.5833333730697632

Epoch [6400/50000]| Loss: 8.919068932300434e-06 | Test loss: 7.414725303649902 | Test acc: 0.5833333730697632

Epoch [6600/50000]| Loss: 7.875146366131958e-06 | Test loss: 7.464669227600098 | Test acc: 0.5833333730697632

Epoch [6800/50000]| Loss: 6.960884547879687e-06 | Test loss: 7.514991283416748 | Test acc: 0.6000000238418579

Epoch [7000/50000]| Loss: 6.156908057164401e-06 | Test loss: 7.565361022949219 | Test acc: 0.6166666746139526

Epoch [7200/50000]| Loss: 5.4499905672855675e-06 | Test loss: 7.614068984985352 | Test acc: 0.6333333849906921

Epoch [7400/50000]| Loss: 4.82959194414434e-06 | Test loss: 7.664480686187744 | Test acc: 0.6166666746139526

Epoch [7600/50000]| Loss: 4.280992470739875e-06 | Test loss: 7.7132673263549805 | Test acc: 0.6166666746139526

Epoch [7800/50000]| Loss: 3.7980782963131787e-06 | Test loss: 7.761932373046875 | Test acc: 0.6333333849906921

Epoch [8000/50000]| Loss: 3.371706725374679e-06 | Test loss: 7.807484149932861 | Test acc: 0.6500000357627869

Epoch [8200/50000]| Loss: 2.9959151106595527e-06 | Test loss: 7.856073379516602 | Test acc: 0.6333333849906921

Epoch [8400/50000]| Loss: 2.6601787794788834e-06 | Test loss: 7.90520715713501 | Test acc: 0.6333333849906921

Epoch [8600/50000]| Loss: 2.3655645691178506e-06 | Test loss: 7.950022220611572 | Test acc: 0.6333333849906921

Epoch [8800/50000]| Loss: 2.102280859617167e-06 | Test loss: 7.9993181228637695 | Test acc: 0.6500000357627869

Epoch [9000/50000]| Loss: 1.8738107883109478e-06 | Test loss: 8.043482780456543 | Test acc: 0.6500000357627869

Epoch [9200/50000]| Loss: 1.6648674545649556e-06 | Test loss: 8.095328330993652 | Test acc: 0.6500000357627869

Epoch [9400/50000]| Loss: 1.477601927035721e-06 | Test loss: 8.141080856323242 | Test acc: 0.6500000357627869

Epoch [9600/50000]| Loss: 1.3110344525557593e-06 | Test loss: 8.187095642089844 | Test acc: 0.6500000357627869

Epoch [9800/50000]| Loss: 1.1655265552690253e-06 | Test loss: 8.232380867004395 | Test acc: 0.6500000357627869

Epoch [10000/50000]| Loss: 1.0369010396971134e-06 | Test loss: 8.27680778503418 | Test acc: 0.6500000357627869

Epoch [10200/50000]| Loss: 9.236156301994924e-07 | Test loss: 8.332098960876465 | Test acc: 0.6500000357627869

Epoch [10400/50000]| Loss: 8.209046882257098e-07 | Test loss: 8.377657890319824 | Test acc: 0.6500000357627869

Epoch [10600/50000]| Loss: 7.33658851004293e-07 | Test loss: 8.421455383300781 | Test acc: 0.6500000357627869

Epoch [10800/50000]| Loss: 6.519133535221044e-07 | Test loss: 8.463788032531738 | Test acc: 0.6500000357627869

Epoch [11000/50000]| Loss: 5.813909069729561e-07 | Test loss: 8.506776809692383 | Test acc: 0.6500000357627869

Epoch [11200/50000]| Loss: 5.179429649615486e-07 | Test loss: 8.546900749206543 | Test acc: 0.6500000357627869

Epoch [11400/50000]| Loss: 4.6397275355047896e-07 | Test loss: 8.5875825881958 | Test acc: 0.6500000357627869

Epoch [11600/50000]| Loss: 4.130965294280031e-07 | Test loss: 8.630277633666992 | Test acc: 0.6666666865348816

Epoch [11800/50000]| Loss: 3.703084132666845e-07 | Test loss: 8.670719146728516 | Test acc: 0.6666666865348816

Epoch [12000/50000]| Loss: 3.303875928395428e-07 | Test loss: 8.712809562683105 | Test acc: 0.6500000357627869

Epoch [12200/50000]| Loss: 2.949266502128012e-07 | Test loss: 8.756936073303223 | Test acc: 0.6500000357627869

Epoch [12400/50000]| Loss: 2.621892463139375e-07 | Test loss: 10.198891639709473 | Test acc: 0.6500000357627869

Epoch [12600/50000]| Loss: 2.3383447000924207e-07 | Test loss: 10.24311351776123 | Test acc: 0.6500000357627869

Epoch [12800/50000]| Loss: 2.0893862995308154e-07 | Test loss: 10.281050682067871 | Test acc: 0.6500000357627869

Epoch [13000/50000]| Loss: 1.873517874173558e-07 | Test loss: 10.324292182922363 | Test acc: 0.6500000357627869

Epoch [13200/50000]| Loss: 1.6544075265301217e-07 | Test loss: 10.36108112335205 | Test acc: 0.6500000357627869

Epoch [13400/50000]| Loss: 1.4810707682499924e-07 | Test loss: 10.405681610107422 | Test acc: 0.6500000357627869

Epoch [13600/50000]| Loss: 1.3415395017091214e-07 | Test loss: 10.441610336303711 | Test acc: 0.6500000357627869

Epoch [13800/50000]| Loss: 1.194849232888373e-07 | Test loss: 10.478506088256836 | Test acc: 0.6500000357627869

Epoch [14000/50000]| Loss: 1.0681625894903846e-07 | Test loss: 10.520624160766602 | Test acc: 0.6500000357627869

Epoch [14200/50000]| Loss: 9.6615181632842e-08 | Test loss: 10.558903694152832 | Test acc: 0.6500000357627869

Epoch [14400/50000]| Loss: 8.45647107894365e-08 | Test loss: 10.60090446472168 | Test acc: 0.6500000357627869

Epoch [14600/50000]| Loss: 7.376637967126953e-08 | Test loss: 10.640356063842773 | Test acc: 0.6500000357627869

Epoch [14800/50000]| Loss: 6.542452979374502e-08 | Test loss: 10.680272102355957 | Test acc: 0.6500000357627869

Epoch [15000/50000]| Loss: 5.9484275283239185e-08 | Test loss: 10.72153091430664 | Test acc: 0.6833333969116211

Epoch [15200/50000]| Loss: 5.493606991535671e-08 | Test loss: 10.761346817016602 | Test acc: 0.6833333969116211

Epoch [15400/50000]| Loss: 4.724689972590568e-08 | Test loss: 12.203948020935059 | Test acc: 0.6833333969116211

Epoch [15600/50000]| Loss: 4.2314542980648184e-08 | Test loss: 12.244400024414062 | Test acc: 0.6666666865348816

Epoch [15800/50000]| Loss: 3.8845140437615555e-08 | Test loss: 12.281681060791016 | Test acc: 0.6666666865348816

Epoch [16000/50000]| Loss: 3.707356910354065e-08 | Test loss: 12.311822891235352 | Test acc: 0.6666666865348816

Epoch [16200/50000]| Loss: 3.325295949707652e-08 | Test loss: 12.343223571777344 | Test acc: 0.6666666865348816

Epoch [16400/50000]| Loss: 2.6252967799678117e-08 | Test loss: 12.382522583007812 | Test acc: 0.6666666865348816

Epoch [16600/50000]| Loss: 2.538564380927255e-08 | Test loss: 12.422088623046875 | Test acc: 0.6666666865348816

Epoch [16800/50000]| Loss: 2.1016896667447327e-08 | Test loss: 12.45760726928711 | Test acc: 0.6666666865348816

Epoch [17000/50000]| Loss: 2.1481977086068582e-08 | Test loss: 12.493753433227539 | Test acc: 0.6666666865348816

Epoch [17200/50000]| Loss: 1.6907060640392046e-08 | Test loss: 12.53577709197998 | Test acc: 0.6666666865348816

Epoch [17400/50000]| Loss: 1.5718711665613228e-08 | Test loss: 12.568955421447754 | Test acc: 0.6666666865348816

Epoch [17600/50000]| Loss: 1.3882702987189077e-08 | Test loss: 12.612834930419922 | Test acc: 0.6666666865348816

Epoch [17800/50000]| Loss: 1.1096873642202354e-08 | Test loss: 12.648948669433594 | Test acc: 0.6666666865348816

Epoch [18000/50000]| Loss: 9.352933538764319e-09 | Test loss: 12.689348220825195 | Test acc: 0.6666666865348816

Epoch [18200/50000]| Loss: 1.0003638806210802e-08 | Test loss: 12.731362342834473 | Test acc: 0.6666666865348816

Epoch [18400/50000]| Loss: 7.273904589766289e-09 | Test loss: 12.771377563476562 | Test acc: 0.6666666865348816

Epoch [18600/50000]| Loss: 1.1152800460934031e-08 | Test loss: 12.801715850830078 | Test acc: 0.6666666865348816

Epoch [18800/50000]| Loss: 6.716116995875154e-09 | Test loss: 12.83680534362793 | Test acc: 0.6666666865348816

Epoch [19000/50000]| Loss: 5.878935560588161e-09 | Test loss: 12.870599746704102 | Test acc: 0.6666666865348816

Epoch [19200/50000]| Loss: 4.6171555467822145e-09 | Test loss: 12.902836799621582 | Test acc: 0.6666666865348816

Epoch [19400/50000]| Loss: 3.766854828057831e-09 | Test loss: 12.934759140014648 | Test acc: 0.6666666865348816

Epoch [19600/50000]| Loss: 5.4646971392458e-09 | Test loss: 12.968114852905273 | Test acc: 0.6666666865348816

Epoch [19800/50000]| Loss: 4.6358774596910735e-09 | Test loss: 13.001200675964355 | Test acc: 0.6500000357627869

Epoch [20000/50000]| Loss: 2.8739075563777305e-09 | Test loss: 13.03464126586914 | Test acc: 0.6500000357627869

Epoch [20200/50000]| Loss: 2.1490684787295322e-09 | Test loss: 13.06644344329834 | Test acc: 0.6500000357627869

Epoch [20400/50000]| Loss: 1.4572341111573905e-09 | Test loss: 13.098254203796387 | Test acc: 0.6500000357627869

Epoch [20600/50000]| Loss: 1.2991251407967752e-09 | Test loss: 13.127968788146973 | Test acc: 0.6500000357627869

Epoch [20800/50000]| Loss: 2.6529269891995e-09 | Test loss: 13.159762382507324 | Test acc: 0.6500000357627869

Epoch [21000/50000]| Loss: 2.030047685508407e-09 | Test loss: 13.189566612243652 | Test acc: 0.6500000357627869

Epoch [21200/50000]| Loss: 9.264308764578288e-10 | Test loss: 13.221129417419434 | Test acc: 0.6500000357627869

Epoch [21400/50000]| Loss: 2.8248745564951605e-09 | Test loss: 13.248001098632812 | Test acc: 0.6500000357627869

Epoch [21600/50000]| Loss: 1.7544116159839973e-09 | Test loss: 13.278014183044434 | Test acc: 0.6500000357627869

Epoch [21800/50000]| Loss: 1.6735752783603175e-09 | Test loss: 13.307363510131836 | Test acc: 0.6500000357627869

Epoch [22000/50000]| Loss: 1.1116674247801939e-09 | Test loss: 13.33376693725586 | Test acc: 0.6500000357627869

Epoch [22200/50000]| Loss: 5.569941174954351e-10 | Test loss: 13.36406135559082 | Test acc: 0.6500000357627869

Epoch [22400/50000]| Loss: 2.4947095500493788e-09 | Test loss: 13.392501831054688 | Test acc: 0.6500000357627869

Epoch [22600/50000]| Loss: 4.629246985743407e-10 | Test loss: 13.416236877441406 | Test acc: 0.6500000357627869

Epoch [22800/50000]| Loss: 4.1576542209043055e-10 | Test loss: 13.446188926696777 | Test acc: 0.6500000357627869

Epoch [23000/50000]| Loss: 3.8393216383880713e-10 | Test loss: 13.470492362976074 | Test acc: 0.6500000357627869

Epoch [23200/50000]| Loss: 3.483631161316225e-10 | Test loss: 13.496055603027344 | Test acc: 0.6500000357627869

Epoch [23400/50000]| Loss: 3.1816854706434583e-10 | Test loss: 13.529071807861328 | Test acc: 0.6500000357627869

Epoch [23600/50000]| Loss: 2.9729874118089583e-10 | Test loss: 13.559581756591797 | Test acc: 0.6500000357627869

Epoch [23800/50000]| Loss: 2.6871846414699974e-10 | Test loss: 13.587803840637207 | Test acc: 0.6500000357627869

Epoch [24000/50000]| Loss: 2.4421797917284493e-10 | Test loss: 13.60767650604248 | Test acc: 0.6500000357627869

Epoch [24200/50000]| Loss: 2.250165609396504e-10 | Test loss: 13.632790565490723 | Test acc: 0.6500000357627869

Epoch [24400/50000]| Loss: 2.0837126468720157e-10 | Test loss: 13.65329647064209 | Test acc: 0.6500000357627869

Epoch [24600/50000]| Loss: 1.9219711933082806e-10 | Test loss: 13.678715705871582 | Test acc: 0.6500000357627869

Epoch [24800/50000]| Loss: 6.767422733311435e-10 | Test loss: 13.703224182128906 | Test acc: 0.6500000357627869

Epoch [25000/50000]| Loss: 1.6487537723985923e-10 | Test loss: 13.727160453796387 | Test acc: 0.6500000357627869

Epoch [25200/50000]| Loss: 1.5267642705651951e-10 | Test loss: 13.758060455322266 | Test acc: 0.6500000357627869

Epoch [25400/50000]| Loss: 1.40359543432389e-10 | Test loss: 13.778833389282227 | Test acc: 0.6500000357627869

Epoch [25600/50000]| Loss: 6.273347397112161e-10 | Test loss: 13.797171592712402 | Test acc: 0.6500000357627869

Epoch [25800/50000]| Loss: 1.1954953693660286e-10 | Test loss: 13.824437141418457 | Test acc: 0.6500000357627869

Epoch [26000/50000]| Loss: 6.095864368838022e-10 | Test loss: 13.842463493347168 | Test acc: 0.6500000357627869

Epoch [26200/50000]| Loss: 1.0380130088805117e-10 | Test loss: 13.858780860900879 | Test acc: 0.6333333849906921

Epoch [26400/50000]| Loss: 9.6996931320259e-11 | Test loss: 13.882829666137695 | Test acc: 0.6333333849906921

Epoch [26600/50000]| Loss: 9.452862798076112e-11 | Test loss: 13.892241477966309 | Test acc: 0.6333333849906921

Epoch [26800/50000]| Loss: 8.48386985663474e-11 | Test loss: 13.92798900604248 | Test acc: 0.6166666746139526

Epoch [27000/50000]| Loss: 7.971395765693501e-11 | Test loss: 13.94847297668457 | Test acc: 0.6166666746139526

Epoch [27200/50000]| Loss: 5.703703620518752e-10 | Test loss: 13.974750518798828 | Test acc: 0.6166666746139526

Epoch [27400/50000]| Loss: 6.940365643304247e-11 | Test loss: 13.981999397277832 | Test acc: 0.6166666746139526

Epoch [27600/50000]| Loss: 6.578225464348719e-11 | Test loss: 14.005589485168457 | Test acc: 0.6166666746139526

Epoch [27800/50000]| Loss: 6.091745857750297e-11 | Test loss: 14.030572891235352 | Test acc: 0.6166666746139526

Epoch [28000/50000]| Loss: 5.76946788954924e-11 | Test loss: 14.052400588989258 | Test acc: 0.6166666746139526

Epoch [28200/50000]| Loss: 5.4179410957644336e-11 | Test loss: 14.067584037780762 | Test acc: 0.6166666746139526

Epoch [28400/50000]| Loss: 5.1510393173082036e-11 | Test loss: 14.088788986206055 | Test acc: 0.6166666746139526

Epoch [28600/50000]| Loss: 4.8111452322086024e-11 | Test loss: 14.102441787719727 | Test acc: 0.6166666746139526

Epoch [28800/50000]| Loss: 4.550021123761461e-11 | Test loss: 14.124162673950195 | Test acc: 0.6166666746139526

Epoch [29000/50000]| Loss: 4.2192270510721386e-11 | Test loss: 14.139208793640137 | Test acc: 0.6166666746139526

Epoch [29200/50000]| Loss: 4.2885712342455307e-11 | Test loss: 14.144719123840332 | Test acc: 0.6166666746139526

Epoch [29400/50000]| Loss: 3.7721766682485836e-11 | Test loss: 14.176400184631348 | Test acc: 0.6166666746139526

Epoch [29600/50000]| Loss: 3.651587712760751e-11 | Test loss: 14.19567584991455 | Test acc: 0.6166666746139526

Epoch [29800/50000]| Loss: 3.3597902238113875e-11 | Test loss: 14.212905883789062 | Test acc: 0.6166666746139526

Epoch [30000/50000]| Loss: 3.079635851888085e-11 | Test loss: 14.239212036132812 | Test acc: 0.6166666746139526

Epoch [30200/50000]| Loss: 3.0378425469601567e-11 | Test loss: 14.248090744018555 | Test acc: 0.6166666746139526

Epoch [30400/50000]| Loss: 2.878879773460241e-11 | Test loss: 14.254645347595215 | Test acc: 0.6166666746139526

Epoch [30600/50000]| Loss: 2.6776034514619518e-11 | Test loss: 14.276941299438477 | Test acc: 0.6166666746139526

Epoch [30800/50000]| Loss: 2.658804253152791e-11 | Test loss: 14.288410186767578 | Test acc: 0.6166666746139526

Epoch [31000/50000]| Loss: 2.530157160174351e-11 | Test loss: 14.309290885925293 | Test acc: 0.6166666746139526

Epoch [31200/50000]| Loss: 2.274235487431664e-11 | Test loss: 14.328934669494629 | Test acc: 0.6166666746139526

Epoch [31400/50000]| Loss: 2.3321735168058133e-11 | Test loss: 14.330151557922363 | Test acc: 0.6166666746139526

Epoch [31600/50000]| Loss: 2.1507240433038533e-11 | Test loss: 14.355047225952148 | Test acc: 0.6166666746139526

Epoch [31800/50000]| Loss: 2.0329311151146e-11 | Test loss: 14.370945930480957 | Test acc: 0.6166666746139526

Epoch [32000/50000]| Loss: 2.0570413428178114e-11 | Test loss: 14.371373176574707 | Test acc: 0.6166666746139526

Epoch [32200/50000]| Loss: 1.8536627094389857e-11 | Test loss: 14.39869499206543 | Test acc: 0.6166666746139526

Epoch [32400/50000]| Loss: 1.9017639893426086e-11 | Test loss: 14.39334774017334 | Test acc: 0.6166666746139526

Epoch [32600/50000]| Loss: 1.7967004620200733e-11 | Test loss: 14.40893268585205 | Test acc: 0.6166666746139526

Epoch [32800/50000]| Loss: 1.6404180097628895e-11 | Test loss: 14.432926177978516 | Test acc: 0.6166666746139526

Epoch [33000/50000]| Loss: 1.6545672817946944e-11 | Test loss: 14.435171127319336 | Test acc: 0.6166666746139526

Epoch [33200/50000]| Loss: 1.5968811342692568e-11 | Test loss: 14.449573516845703 | Test acc: 0.6166666746139526

Epoch [33400/50000]| Loss: 1.482420609877355e-11 | Test loss: 14.46760368347168 | Test acc: 0.6166666746139526

Epoch [33600/50000]| Loss: 1.4650383337033723e-11 | Test loss: 14.470980644226074 | Test acc: 0.6166666746139526

Epoch [33800/50000]| Loss: 1.5175685361135116e-11 | Test loss: 14.48271656036377 | Test acc: 0.6166666746139526

Epoch [34000/50000]| Loss: 1.4240623437411504e-11 | Test loss: 14.484646797180176 | Test acc: 0.6166666746139526

Epoch [34200/50000]| Loss: 1.4454127131302563e-11 | Test loss: 14.490129470825195 | Test acc: 0.6166666746139526

Epoch [34400/50000]| Loss: 1.3421638800359403e-11 | Test loss: 14.506799697875977 | Test acc: 0.6166666746139526

Epoch [34600/50000]| Loss: 1.3027520034958329e-11 | Test loss: 14.515089988708496 | Test acc: 0.6166666746139526

Epoch [34800/50000]| Loss: 1.2783799192839229e-11 | Test loss: 14.525429725646973 | Test acc: 0.6166666746139526

Epoch [35000/50000]| Loss: 1.2091705241246142e-11 | Test loss: 14.537230491638184 | Test acc: 0.6166666746139526

Epoch [35200/50000]| Loss: 1.1682515198285781e-11 | Test loss: 14.538467407226562 | Test acc: 0.6166666746139526

Epoch [35400/50000]| Loss: 1.1189028002733803e-11 | Test loss: 14.549182891845703 | Test acc: 0.6166666746139526

Epoch [35600/50000]| Loss: 1.1145312971139187e-11 | Test loss: 14.557954788208008 | Test acc: 0.6166666746139526

Epoch [35800/50000]| Loss: 1.1052328323379879e-11 | Test loss: 14.56375789642334 | Test acc: 0.6166666746139526

Epoch [36000/50000]| Loss: 1.0184407137070473e-11 | Test loss: 14.579349517822266 | Test acc: 0.6166666746139526

Epoch [36200/50000]| Loss: 1.0722483664848959e-11 | Test loss: 14.583467483520508 | Test acc: 0.6166666746139526

Epoch [36400/50000]| Loss: 9.516934115771924e-12 | Test loss: 14.602983474731445 | Test acc: 0.6166666746139526

Epoch [36600/50000]| Loss: 9.514989490755354e-12 | Test loss: 14.607243537902832 | Test acc: 0.6166666746139526

Epoch [36800/50000]| Loss: 9.836281095187971e-12 | Test loss: 14.608552932739258 | Test acc: 0.6166666746139526

Epoch [37000/50000]| Loss: 9.072338366666877e-12 | Test loss: 14.620014190673828 | Test acc: 0.6166666746139526

Epoch [37200/50000]| Loss: 9.073542264759205e-12 | Test loss: 14.63068675994873 | Test acc: 0.6166666746139526

Epoch [37400/50000]| Loss: 8.775107376846059e-12 | Test loss: 14.645861625671387 | Test acc: 0.6166666746139526

Epoch [37600/50000]| Loss: 8.471316530200834e-12 | Test loss: 14.644025802612305 | Test acc: 0.6166666746139526

Epoch [37800/50000]| Loss: 8.242349511244917e-12 | Test loss: 14.648316383361816 | Test acc: 0.6166666746139526

Epoch [38000/50000]| Loss: 7.927465107970821e-12 | Test loss: 14.665948867797852 | Test acc: 0.6166666746139526

Epoch [38200/50000]| Loss: 8.475518897821388e-12 | Test loss: 14.65312385559082 | Test acc: 0.6166666746139526

Epoch [38400/50000]| Loss: 7.916296090870745e-12 | Test loss: 14.674219131469727 | Test acc: 0.6166666746139526

Epoch [38600/50000]| Loss: 8.22284601520451e-12 | Test loss: 14.667810440063477 | Test acc: 0.6166666746139526

Epoch [38800/50000]| Loss: 7.453120585976247e-12 | Test loss: 14.687384605407715 | Test acc: 0.6166666746139526

Epoch [39000/50000]| Loss: 7.788972591904475e-12 | Test loss: 14.687569618225098 | Test acc: 0.6166666746139526

Epoch [39200/50000]| Loss: 7.733242865515244e-12 | Test loss: 14.696063995361328 | Test acc: 0.6166666746139526

Epoch [39400/50000]| Loss: 7.388064986180165e-12 | Test loss: 14.706647872924805 | Test acc: 0.6166666746139526

Epoch [39600/50000]| Loss: 7.528566312031693e-12 | Test loss: 14.707212448120117 | Test acc: 0.6166666746139526

Epoch [39800/50000]| Loss: 6.803449296988173e-12 | Test loss: 14.723294258117676 | Test acc: 0.6166666746139526

Epoch [40000/50000]| Loss: 6.78350431382313e-12 | Test loss: 14.730762481689453 | Test acc: 0.6166666746139526

Epoch [40200/50000]| Loss: 6.679983389351607e-12 | Test loss: 14.7279634475708 | Test acc: 0.6166666746139526

Epoch [40400/50000]| Loss: 6.7067250190955274e-12 | Test loss: 14.722419738769531 | Test acc: 0.6166666746139526

Epoch [40600/50000]| Loss: 6.73161179576276e-12 | Test loss: 14.717020034790039 | Test acc: 0.6166666746139526

Epoch [40800/50000]| Loss: 6.943880800219793e-12 | Test loss: 14.719932556152344 | Test acc: 0.6166666746139526

Epoch [41000/50000]| Loss: 6.741227367990099e-12 | Test loss: 14.724199295043945 | Test acc: 0.6166666746139526

Epoch [41200/50000]| Loss: 7.161274177824861e-12 | Test loss: 14.729557991027832 | Test acc: 0.6166666746139526

Epoch [41400/50000]| Loss: 6.3571270643436595e-12 | Test loss: 14.745817184448242 | Test acc: 0.6166666746139526

Epoch [41600/50000]| Loss: 5.8956342412208596e-12 | Test loss: 14.759477615356445 | Test acc: 0.6166666746139526

Epoch [41800/50000]| Loss: 5.993733287468217e-12 | Test loss: 14.757428169250488 | Test acc: 0.6166666746139526

Epoch [42000/50000]| Loss: 6.158135199690884e-12 | Test loss: 14.758402824401855 | Test acc: 0.6166666746139526

Epoch [42200/50000]| Loss: 5.981246314207267e-12 | Test loss: 14.769401550292969 | Test acc: 0.6166666746139526

Epoch [42400/50000]| Loss: 5.481477684948777e-12 | Test loss: 14.784106254577637 | Test acc: 0.6166666746139526

Epoch [42600/50000]| Loss: 5.849652359724011e-12 | Test loss: 14.784066200256348 | Test acc: 0.6166666746139526

Epoch [42800/50000]| Loss: 6.1604076874444136e-12 | Test loss: 14.780256271362305 | Test acc: 0.6166666746139526

Epoch [43000/50000]| Loss: 5.526344572931441e-12 | Test loss: 14.791254997253418 | Test acc: 0.6166666746139526

Epoch [43200/50000]| Loss: 5.733287976317225e-12 | Test loss: 14.782875061035156 | Test acc: 0.6166666746139526

Epoch [43400/50000]| Loss: 5.485886918343841e-12 | Test loss: 14.788421630859375 | Test acc: 0.6166666746139526

Epoch [43600/50000]| Loss: 5.6523262632890425e-12 | Test loss: 14.796037673950195 | Test acc: 0.6166666746139526

Epoch [43800/50000]| Loss: 5.811907812652839e-12 | Test loss: 14.788078308105469 | Test acc: 0.6166666746139526

Epoch [44000/50000]| Loss: 4.95633265332196e-12 | Test loss: 14.807668685913086 | Test acc: 0.6166666746139526

Epoch [44200/50000]| Loss: 4.975399866408159e-12 | Test loss: 14.810047149658203 | Test acc: 0.6166666746139526

Epoch [44400/50000]| Loss: 5.009650680398714e-12 | Test loss: 14.81877326965332 | Test acc: 0.6166666746139526

Epoch [44600/50000]| Loss: 4.907942108278718e-12 | Test loss: 14.819985389709473 | Test acc: 0.6166666746139526

Epoch [44800/50000]| Loss: 5.003439502992979e-12 | Test loss: 14.816807746887207 | Test acc: 0.6166666746139526

Epoch [45000/50000]| Loss: 5.285770952878632e-12 | Test loss: 14.823657035827637 | Test acc: 0.6166666746139526

Epoch [45200/50000]| Loss: 4.816436745963548e-12 | Test loss: 14.825916290283203 | Test acc: 0.6166666746139526

Epoch [45400/50000]| Loss: 4.973284371129205e-12 | Test loss: 14.836710929870605 | Test acc: 0.6166666746139526

Epoch [45600/50000]| Loss: 4.754599058215403e-12 | Test loss: 14.84179973602295 | Test acc: 0.6166666746139526

Epoch [45800/50000]| Loss: 4.6662313769874064e-12 | Test loss: 14.849921226501465 | Test acc: 0.6166666746139526

Epoch [46000/50000]| Loss: 5.011198053739285e-12 | Test loss: 14.842015266418457 | Test acc: 0.6166666746139526

Epoch [46200/50000]| Loss: 4.428276322021585e-12 | Test loss: 14.859390258789062 | Test acc: 0.6166666746139526

Epoch [46400/50000]| Loss: 4.680031535919671e-12 | Test loss: 14.854981422424316 | Test acc: 0.6166666746139526

Epoch [46600/50000]| Loss: 4.597318185861621e-12 | Test loss: 14.85905647277832 | Test acc: 0.6166666746139526

Epoch [46800/50000]| Loss: 4.3400474186716664e-12 | Test loss: 14.872050285339355 | Test acc: 0.6166666746139526

Epoch [47000/50000]| Loss: 4.264405235182567e-12 | Test loss: 14.87592887878418 | Test acc: 0.6166666746139526

Epoch [47200/50000]| Loss: 4.353486321440059e-12 | Test loss: 14.880329132080078 | Test acc: 0.6166666746139526

Epoch [47400/50000]| Loss: 4.377165296887142e-12 | Test loss: 14.883623123168945 | Test acc: 0.6166666746139526

Epoch [47600/50000]| Loss: 3.939179711587304e-12 | Test loss: 14.898781776428223 | Test acc: 0.6166666746139526

Epoch [47800/50000]| Loss: 4.543088127917372e-12 | Test loss: 14.880878448486328 | Test acc: 0.6166666746139526

Epoch [48000/50000]| Loss: 3.989512279561902e-12 | Test loss: 14.897659301757812 | Test acc: 0.6166666746139526

Epoch [48200/50000]| Loss: 4.2632338631554134e-12 | Test loss: 14.896625518798828 | Test acc: 0.6166666746139526

Epoch [48400/50000]| Loss: 3.8679311489819845e-12 | Test loss: 14.911261558532715 | Test acc: 0.6166666746139526

Epoch [48600/50000]| Loss: 4.095920217578319e-12 | Test loss: 14.900321960449219 | Test acc: 0.6166666746139526

Epoch [48800/50000]| Loss: 3.901126817418277e-12 | Test loss: 14.90514087677002 | Test acc: 0.6166666746139526

Epoch [49000/50000]| Loss: 4.170296920291694e-12 | Test loss: 14.908403396606445 | Test acc: 0.6166666746139526

Epoch [49200/50000]| Loss: 3.6842287033656e-12 | Test loss: 14.92368221282959 | Test acc: 0.6166666746139526

Epoch [49400/50000]| Loss: 4.1042867889029555e-12 | Test loss: 14.908949851989746 | Test acc: 0.6166666746139526

Epoch [49600/50000]| Loss: 3.7399562613504855e-12 | Test loss: 14.923355102539062 | Test acc: 0.6166666746139526

Epoch [49800/50000]| Loss: 3.972038843669257e-12 | Test loss: 14.908845901489258 | Test acc: 0.6166666746139526

Epoch [50000/50000]| Loss: 4.208068355576744e-12 | Test loss: 14.90880012512207 | Test acc: 0.6166666746139526

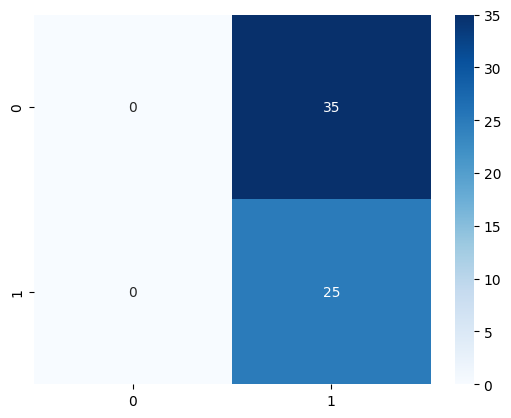

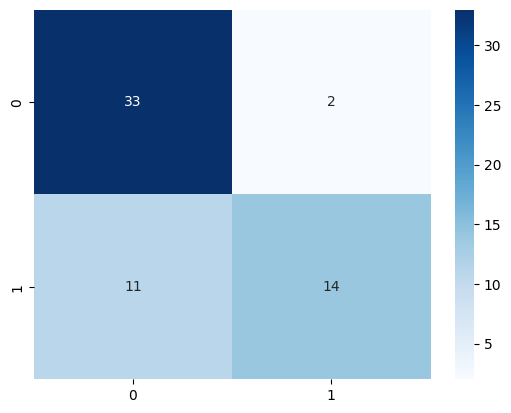

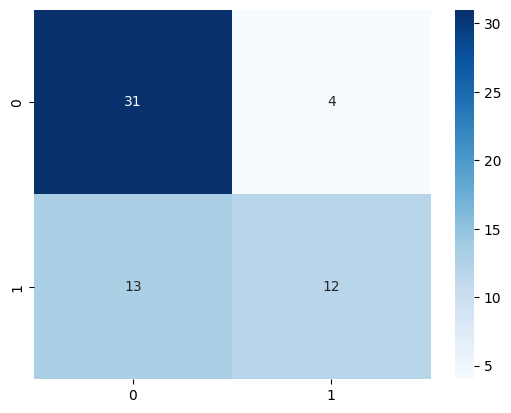

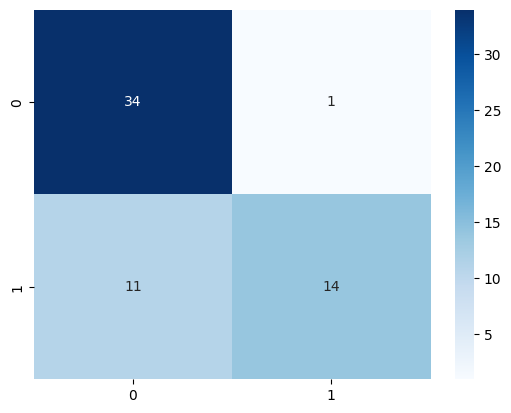

show_conf_mat(model_3,X_t,y_t)

#some testing code to test the dim of matrices of the training loop

model_3.eval()

with torch.no_grad():

y_pred = model_3(X_t)

y_pred

tensor([[[1.1749e-21]],

[[3.8824e-23]],

[[1.7553e-15]],

[[1.0000e+00]],

[[1.7950e-16]],

[[2.8648e-15]],

[[1.0000e+00]],

[[1.0000e+00]],

[[1.0000e+00]],

[[1.5038e-12]],

[[6.2817e-15]],

[[6.8943e-19]],

[[1.5233e-15]],

[[5.1181e-15]],

[[5.5409e-13]],

[[1.3733e-23]],

[[1.5237e-14]],

[[1.7051e-07]],

[[2.7596e-20]],

[[5.4328e-13]],

[[1.0000e+00]],

[[1.0000e+00]],

[[1.2664e-03]],

[[3.8322e-12]],

[[9.9992e-01]],

[[3.6692e-22]],

[[5.3668e-19]],

[[1.3105e-14]],

[[1.2365e-14]],

[[5.1813e-24]],

[[2.9832e-09]],

[[1.7931e-22]],

[[1.0000e+00]],

[[1.0000e+00]],

[[1.3017e-07]],

[[1.5817e-04]],

[[1.6261e-15]],

[[1.3738e-05]],

[[2.8454e-11]],

[[1.8070e-14]],

[[1.3996e-10]],

[[1.0000e+00]],

[[2.1376e-05]],

[[9.9893e-01]],

[[1.0000e+00]],

[[7.9655e-16]],

[[1.0000e+00]],

[[1.6864e-18]],

[[7.7240e-21]],

[[1.5570e-18]],

[[1.0000e+00]],

[[4.6589e-15]],

[[5.7829e-09]],

[[1.6513e-25]],

[[1.5362e-22]],

[[2.0423e-14]],

[[2.8846e-15]],

[[9.9998e-01]],

[[1.7517e-17]],

[[1.0000e+00]]], device='cuda:0')

y_t.shape, y_pred.shape

(torch.Size([60, 1]), torch.Size([60, 1, 1]))

# although the training loss went to around 0, the testing acc is not great...

# I think the model is overfiting the entrie time

# the highest test acc I got was 73%

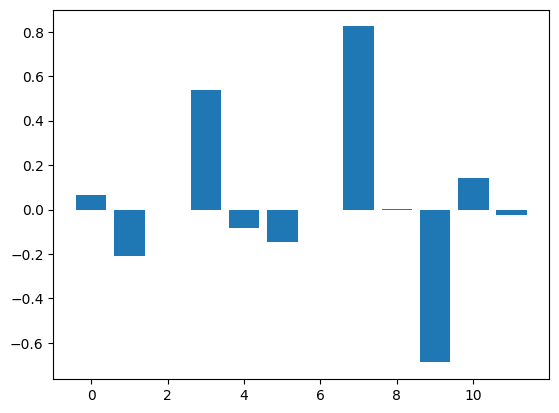

model_4=nn.Sequential(

nn.Linear(

in_features=inp_size,out_features=inp_size

),

nn.ReLU(),

nn.Linear(

in_features=inp_size,out_features=1

)

).to(device)

loss_fn=nn.BCEWithLogitsLoss()#sigmoid build in

opt=torch.optim.SGD(params=model_4.parameters(),lr=0.001)

# Training loop

num_epochs = 50000

for epoch in range(num_epochs):

model_4.train()

opt.zero_grad()

outputs = model_4(X_l)

#print(y_l.shape,outputs.shape)

loss = loss_fn(outputs, y_l)

loss.backward()

opt.step()

if (epoch+1) % 200 == 0:

model_4.eval()

with torch.inference_mode():

y_pred=model_4(X_t).squeeze(1)

t_loss=loss_fn(y_pred,y_t)

t_acc=(torch.round(torch.sigmoid(y_pred))==y_t).sum()/len(y_t)

print(f'Epoch [{epoch+1}/{num_epochs}]| Loss: {loss.item()} | Test loss: {t_loss} | Test acc: {t_acc}')

Epoch [200/50000]| Loss: 0.7396892309188843 | Test loss: 0.7253536581993103 | Test acc: 0.40000003576278687

Epoch [400/50000]| Loss: 0.7162843942642212 | Test loss: 0.7126798629760742 | Test acc: 0.4833333492279053

Epoch [600/50000]| Loss: 0.6965829730033875 | Test loss: 0.7027833461761475 | Test acc: 0.5333333611488342

Epoch [800/50000]| Loss: 0.679774284362793 | Test loss: 0.6949777603149414 | Test acc: 0.5333333611488342

Epoch [1000/50000]| Loss: 0.6652429699897766 | Test loss: 0.6887955069541931 | Test acc: 0.6000000238418579

Epoch [1200/50000]| Loss: 0.6525279879570007 | Test loss: 0.6838710904121399 | Test acc: 0.6000000238418579

Epoch [1400/50000]| Loss: 0.6412969827651978 | Test loss: 0.6798798441886902 | Test acc: 0.5833333730697632

Epoch [1600/50000]| Loss: 0.6312581300735474 | Test loss: 0.6766141653060913 | Test acc: 0.6000000238418579

Epoch [1800/50000]| Loss: 0.6222255825996399 | Test loss: 0.6739140152931213 | Test acc: 0.6000000238418579

Epoch [2000/50000]| Loss: 0.6140072345733643 | Test loss: 0.6715956926345825 | Test acc: 0.6000000238418579

Epoch [2200/50000]| Loss: 0.6063694953918457 | Test loss: 0.6695378422737122 | Test acc: 0.6000000238418579

Epoch [2400/50000]| Loss: 0.5992622375488281 | Test loss: 0.6677345633506775 | Test acc: 0.6000000238418579

Epoch [2600/50000]| Loss: 0.5926132202148438 | Test loss: 0.6660757064819336 | Test acc: 0.6000000238418579

Epoch [2800/50000]| Loss: 0.5863750576972961 | Test loss: 0.6646950244903564 | Test acc: 0.6000000238418579

Epoch [3000/50000]| Loss: 0.580334484577179 | Test loss: 0.6633870601654053 | Test acc: 0.6000000238418579

Epoch [3200/50000]| Loss: 0.5745308995246887 | Test loss: 0.6620970964431763 | Test acc: 0.6000000238418579

Epoch [3400/50000]| Loss: 0.5688847899436951 | Test loss: 0.6607946157455444 | Test acc: 0.6000000238418579

Epoch [3600/50000]| Loss: 0.5633763670921326 | Test loss: 0.6594444513320923 | Test acc: 0.5833333730697632

Epoch [3800/50000]| Loss: 0.5580199956893921 | Test loss: 0.6580381393432617 | Test acc: 0.5833333730697632

Epoch [4000/50000]| Loss: 0.5528206825256348 | Test loss: 0.6566060781478882 | Test acc: 0.5833333730697632

Epoch [4200/50000]| Loss: 0.547629177570343 | Test loss: 0.6550620198249817 | Test acc: 0.5833333730697632

Epoch [4400/50000]| Loss: 0.5424522757530212 | Test loss: 0.6534416675567627 | Test acc: 0.5833333730697632

Epoch [4600/50000]| Loss: 0.537298321723938 | Test loss: 0.6517847776412964 | Test acc: 0.5833333730697632

Epoch [4800/50000]| Loss: 0.532189667224884 | Test loss: 0.6500828862190247 | Test acc: 0.5833333730697632

Epoch [5000/50000]| Loss: 0.5271359086036682 | Test loss: 0.6482961177825928 | Test acc: 0.5833333730697632

Epoch [5200/50000]| Loss: 0.522089958190918 | Test loss: 0.6464494466781616 | Test acc: 0.6000000238418579

Epoch [5400/50000]| Loss: 0.5170531272888184 | Test loss: 0.6445052623748779 | Test acc: 0.6000000238418579

Epoch [5600/50000]| Loss: 0.511976957321167 | Test loss: 0.6424915790557861 | Test acc: 0.6000000238418579

Epoch [5800/50000]| Loss: 0.5069401264190674 | Test loss: 0.6403533220291138 | Test acc: 0.6000000238418579

Epoch [6000/50000]| Loss: 0.5018759369850159 | Test loss: 0.6381059288978577 | Test acc: 0.6000000238418579

Epoch [6200/50000]| Loss: 0.4967987835407257 | Test loss: 0.6357623338699341 | Test acc: 0.6000000238418579

Epoch [6400/50000]| Loss: 0.49185827374458313 | Test loss: 0.6334653496742249 | Test acc: 0.6000000238418579

Epoch [6600/50000]| Loss: 0.48705562949180603 | Test loss: 0.6311294436454773 | Test acc: 0.6000000238418579

Epoch [6800/50000]| Loss: 0.48233547806739807 | Test loss: 0.6287215352058411 | Test acc: 0.6000000238418579

Epoch [7000/50000]| Loss: 0.47760576009750366 | Test loss: 0.6262781023979187 | Test acc: 0.6000000238418579

Epoch [7200/50000]| Loss: 0.4728202521800995 | Test loss: 0.623813271522522 | Test acc: 0.6000000238418579

Epoch [7400/50000]| Loss: 0.4680238962173462 | Test loss: 0.6213484406471252 | Test acc: 0.6000000238418579

Epoch [7600/50000]| Loss: 0.4633120596408844 | Test loss: 0.618866503238678 | Test acc: 0.6333333849906921

Epoch [7800/50000]| Loss: 0.45870599150657654 | Test loss: 0.6163574457168579 | Test acc: 0.6333333849906921

Epoch [8000/50000]| Loss: 0.4542057514190674 | Test loss: 0.6138903498649597 | Test acc: 0.6500000357627869

Epoch [8200/50000]| Loss: 0.44982218742370605 | Test loss: 0.611524224281311 | Test acc: 0.6666666865348816

Epoch [8400/50000]| Loss: 0.44546642899513245 | Test loss: 0.6091405749320984 | Test acc: 0.6666666865348816

Epoch [8600/50000]| Loss: 0.44112178683280945 | Test loss: 0.6068019270896912 | Test acc: 0.6666666865348816

Epoch [8800/50000]| Loss: 0.4368794858455658 | Test loss: 0.6045339107513428 | Test acc: 0.6666666865348816

Epoch [9000/50000]| Loss: 0.43266358971595764 | Test loss: 0.602261483669281 | Test acc: 0.6833333969116211

Epoch [9200/50000]| Loss: 0.42849457263946533 | Test loss: 0.6000149250030518 | Test acc: 0.6833333969116211

Epoch [9400/50000]| Loss: 0.4243742525577545 | Test loss: 0.5978257656097412 | Test acc: 0.6833333969116211

Epoch [9600/50000]| Loss: 0.42029932141304016 | Test loss: 0.5956773161888123 | Test acc: 0.6833333969116211

Epoch [9800/50000]| Loss: 0.4162881374359131 | Test loss: 0.5935574769973755 | Test acc: 0.6833333969116211

Epoch [10000/50000]| Loss: 0.41235095262527466 | Test loss: 0.5914856195449829 | Test acc: 0.6833333969116211

Epoch [10200/50000]| Loss: 0.40855205059051514 | Test loss: 0.5895212888717651 | Test acc: 0.6833333969116211

Epoch [10400/50000]| Loss: 0.4048292636871338 | Test loss: 0.5875906944274902 | Test acc: 0.6833333969116211

Epoch [10600/50000]| Loss: 0.4011887311935425 | Test loss: 0.585712730884552 | Test acc: 0.6833333969116211

Epoch [10800/50000]| Loss: 0.3976222276687622 | Test loss: 0.5838722586631775 | Test acc: 0.7000000476837158

Epoch [11000/50000]| Loss: 0.39411455392837524 | Test loss: 0.5821669101715088 | Test acc: 0.7000000476837158

Epoch [11200/50000]| Loss: 0.39068570733070374 | Test loss: 0.5805732607841492 | Test acc: 0.7000000476837158

Epoch [11400/50000]| Loss: 0.3873482942581177 | Test loss: 0.5789878368377686 | Test acc: 0.7166666984558105

Epoch [11600/50000]| Loss: 0.38405707478523254 | Test loss: 0.5774484276771545 | Test acc: 0.7166666984558105

Epoch [11800/50000]| Loss: 0.38082075119018555 | Test loss: 0.5759648680686951 | Test acc: 0.7166666984558105

Epoch [12000/50000]| Loss: 0.3776426315307617 | Test loss: 0.57452791929245 | Test acc: 0.7166666984558105

Epoch [12200/50000]| Loss: 0.37449702620506287 | Test loss: 0.5731417536735535 | Test acc: 0.7333333492279053

Epoch [12400/50000]| Loss: 0.37141790986061096 | Test loss: 0.5718206167221069 | Test acc: 0.7333333492279053

Epoch [12600/50000]| Loss: 0.36840781569480896 | Test loss: 0.5705723166465759 | Test acc: 0.7333333492279053

Epoch [12800/50000]| Loss: 0.36548343300819397 | Test loss: 0.5693975687026978 | Test acc: 0.7333333492279053

Epoch [13000/50000]| Loss: 0.36264023184776306 | Test loss: 0.5682846307754517 | Test acc: 0.7500000596046448

Epoch [13200/50000]| Loss: 0.35987451672554016 | Test loss: 0.567166268825531 | Test acc: 0.7500000596046448

Epoch [13400/50000]| Loss: 0.3572196066379547 | Test loss: 0.5660492181777954 | Test acc: 0.7500000596046448

Epoch [13600/50000]| Loss: 0.35462772846221924 | Test loss: 0.5649657249450684 | Test acc: 0.7500000596046448

Epoch [13800/50000]| Loss: 0.35210058093070984 | Test loss: 0.5639618039131165 | Test acc: 0.7500000596046448

Epoch [14000/50000]| Loss: 0.3496253788471222 | Test loss: 0.5629955530166626 | Test acc: 0.7500000596046448

Epoch [14200/50000]| Loss: 0.3472071588039398 | Test loss: 0.5620896816253662 | Test acc: 0.7333333492279053

Epoch [14400/50000]| Loss: 0.34484198689460754 | Test loss: 0.5612649321556091 | Test acc: 0.7333333492279053

Epoch [14600/50000]| Loss: 0.3425419330596924 | Test loss: 0.5604904294013977 | Test acc: 0.7333333492279053

Epoch [14800/50000]| Loss: 0.34030306339263916 | Test loss: 0.5597413182258606 | Test acc: 0.7333333492279053