Feature Scaling

Why do we need to scale the features?

For ordinary least squares (OLS) regression, the scale of the features does not matter: rescaling a feature rescales its fitted coefficient but leaves the predictions unchanged. However, for some other machine learning methods that we will introduce later in this class, the magnitude of the features can have a significant impact on the model.

Many machine learning algorithms require some notion of “similarity” or “distance” between data points in high-dimensional space. For example, one simple method for prediction is to find the data points that are “closest” to the new data point, and then use the target values of those data points to predict the target value of the new data point.
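A minimal NumPy sketch of this nearest-neighbor idea, assuming Euclidean distance and predicting the average target of the \(k\) closest training points. The function name `knn_predict`, the parameter `k`, and the toy data are hypothetical and chosen only for illustration; in practice one would typically use a library implementation such as scikit-learn's `KNeighborsRegressor`.

```python
import numpy as np

def knn_predict(X_train, y_train, x_new, k=3):
    """Hypothetical helper: predict the mean target of the k nearest training points."""
    # Euclidean distance from x_new to every training point (one row per point)
    distances = np.sqrt(((X_train - x_new) ** 2).sum(axis=1))
    # Indices of the k smallest distances
    nearest = np.argsort(distances)[:k]
    # Average the targets of those neighbors
    return y_train[nearest].mean()

# Toy data: two features per point, one numeric target
X_train = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [5.0, 5.0], [6.0, 5.0]])
y_train = np.array([1.0, 2.0, 3.0, 10.0, 12.0])

# The three closest points to (0.2, 0.2) have targets 1, 2, 3
print(knn_predict(X_train, y_train, np.array([0.2, 0.2]), k=3))  # 2.0
```

Because the prediction depends only on which points are “closest”, anything that distorts the distances, such as features on very different scales, changes the prediction.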

If we use the Euclidean distance, the distance between two points \(x\) and \(y\) in \(d\)-dimensional space is

\[
\|x - y\| = \sqrt{\sum_{i=1}^{d} (x_i - y_i)^2}
\]

where \(x_i\) and \(y_i\) are the \(i\)-th feature/coordinate of the two data points. If the features are on different scales, then the distance will be dominated by the features with the largest scale.
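To make this concrete, here is the Euclidean distance between two hypothetical penguins described by bill depth (mm) and body mass (g); the numbers are illustrative and not taken from the dataset. The 100 g difference in body mass swamps the 4 mm difference in bill depth purely because grams produce larger numbers.

```python
import numpy as np

# Two hypothetical penguins: [bill_depth_mm, body_mass_g] (illustrative values only)
a = np.array([15.0, 4000.0])
b = np.array([19.0, 4100.0])

diff = a - b
distance = np.sqrt(np.sum(diff ** 2))
# Fraction of the squared distance contributed by each feature
share = diff ** 2 / np.sum(diff ** 2)

print(round(distance, 2))  # 100.08
print(np.round(share, 4))  # [0.0016 0.9984]
```

Body mass accounts for over 99.8% of the squared distance, so a distance-based method would effectively ignore bill depth unless the features are rescaled.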

Even for linear regression, scaling can help with interpreting the coefficients. After standardization, all features are measured in standard deviations, so each coefficient represents the expected change in the target variable for a one-standard-deviation increase in that feature (holding the other features fixed). This makes it possible to compare the magnitudes of the coefficients, since they are all expressed per standard deviation of their feature.
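A short worked derivation for a single feature, writing the standardized feature as \(X' = (X - \bar{X})/\sigma_X\) (so \(X = \bar{X} + \sigma_X X'\)) and the OLS fit on the original feature as \(\hat{Y} = \hat{\beta}_0 + \hat{\beta}_1 X\):

\[
\hat{Y} = \hat{\beta}_0 + \hat{\beta}_1 X
= \hat{\beta}_0 + \hat{\beta}_1 \left(\bar{X} + \sigma_X X'\right)
= \left(\hat{\beta}_0 + \hat{\beta}_1 \bar{X}\right) + \left(\hat{\beta}_1 \sigma_X\right) X'
\]

Fitting on \(X'\) therefore gives intercept \(\hat{\beta}_0 + \hat{\beta}_1 \bar{X}\) and slope \(\hat{\beta}_1 \sigma_X\), which is the change in \(\hat{Y}\) for a one-standard-deviation increase in \(X\); the fitted values themselves are unchanged. If the target is standardized as well, the slope becomes \(\hat{\beta}_1 \sigma_X / \sigma_Y\).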

Let \(X\) be the feature vector (one column of the design matrix) and let \(X'\) be the scaled feature vector.

Here are some common scaling methods:

Min-max scaling: scales the data to lie in the range [0, 1]

\[
X' = \frac{X - \min(X)}{\max(X) - \min(X)}
\]

Standardization (z-score scaling): scales the data to have mean 0 and standard deviation 1

\[
X' = \frac{X - \bar{X}}{\sigma_X}
\]

where \(\bar{X}\) is the sample mean of \(X\) and \(\sigma_X\) is the sample standard deviation of \(X\).
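Min-max scaling and standardization are easy to write directly in NumPy. Below is a minimal sketch on illustrative values, checked against scikit-learn's `MinMaxScaler` and `StandardScaler`. One detail worth knowing: `StandardScaler` divides by \(n\) (equivalent to `np.std(x, ddof=0)`), not by \(n - 1\), so its \(\sigma_X\) differs slightly from the \(n-1\) sample standard deviation on small samples.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# A single feature as a column vector (illustrative values)
X = np.array([[170.0], [190.0], [210.0], [230.0]])

# Min-max scaling, column by column: (X - min) / (max - min)
X_minmax = (X - X.min(axis=0)) / (X.max(axis=0) - X.min(axis=0))

# Standardization: (X - mean) / std; np.std uses ddof=0 (divides by n) by default
X_std = (X - X.mean(axis=0)) / X.std(axis=0)

# scikit-learn's scalers produce the same values
assert np.allclose(X_minmax, MinMaxScaler().fit_transform(X))
assert np.allclose(X_std, StandardScaler().fit_transform(X))

print(X_minmax.ravel())
print(X_std.ravel())
```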

These are linear transformations of the data. Sometimes we also want to transform the data non-linearly. For example, we might want to take the logarithm of the data if the data spans several orders of magnitude.
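A minimal NumPy sketch of a log transform on hypothetical values from 1 to 10,000. Unlike min-max scaling and standardization, this is a non-linear transformation, so it does change the shape of the distribution.

```python
import numpy as np

# Hypothetical values spanning four orders of magnitude
x = np.array([1.0, 10.0, 100.0, 1000.0, 10000.0])

# Base-10 log: equal steps now mean "10 times larger"
x_log = np.log10(x)
print(x_log)  # evenly spaced: 0, 1, 2, 3, 4

# log1p(x) = log(1 + x) is a common variant when the data contain zeros
x_log1p = np.log1p(np.array([0.0, 9.0, 99.0]))
print(x_log1p)  # natural logs of 1, 10, 100
```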

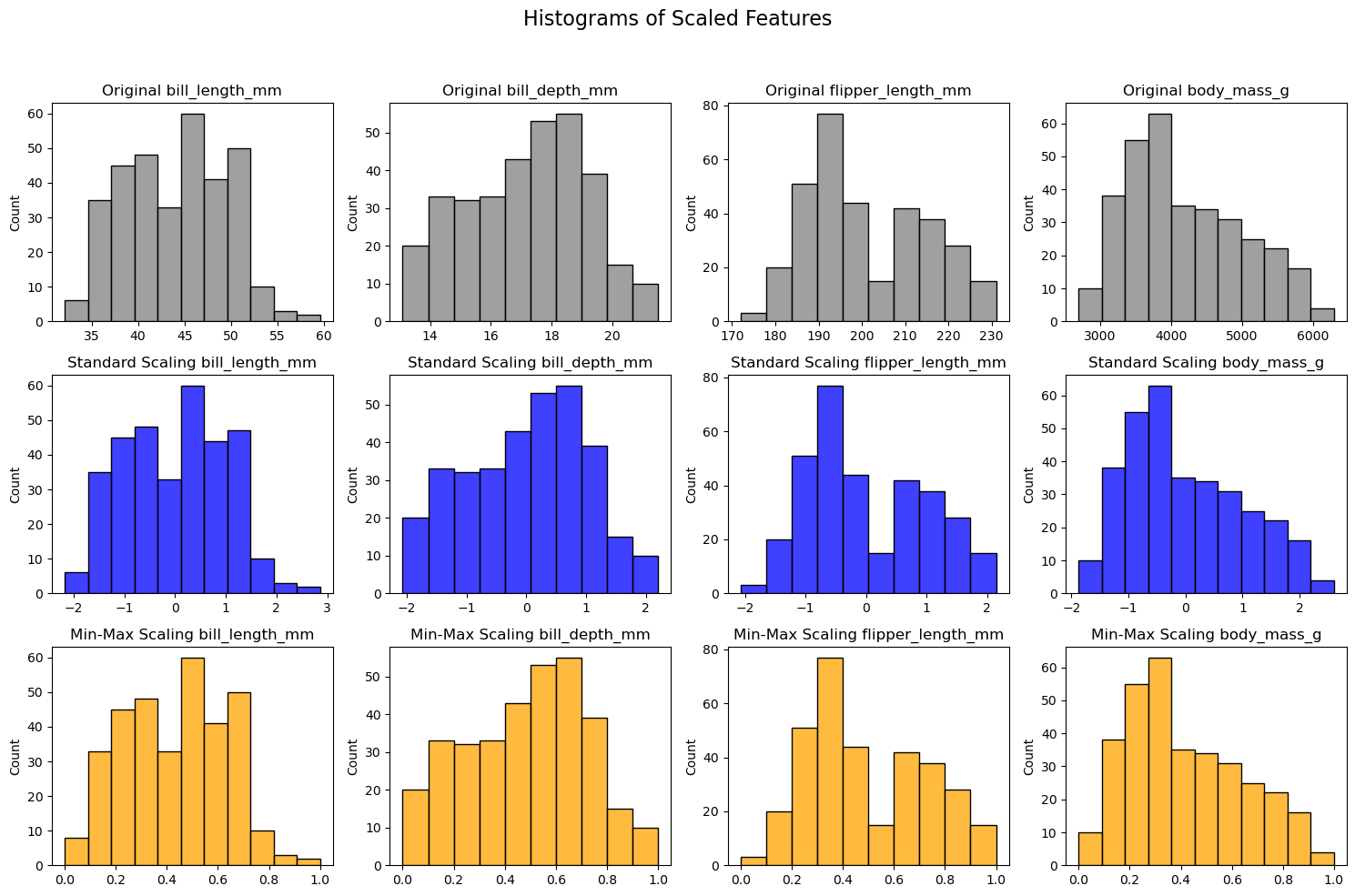

The following code visualizes the histograms of the numerical features in the penguins dataset, before and after scaling. Notice that these linear transformations do not change the shape of the distributions; only the values on the x-axis change.

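If we use standardization, the unit of the data is “standard deviations from the mean” (a value of 1.5 is 1.5 standard deviations above the mean). If we use min-max scaling, a value is the fraction of the range above the minimum (a value of 0.5 is halfway between the minimum and the maximum).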

```python
import seaborn as sns
import matplotlib.pyplot as plt
from sklearn.preprocessing import StandardScaler, MinMaxScaler
import pandas as pd

# Load the Penguins dataset
df = sns.load_dataset('penguins')
df.dropna(inplace=True)  # Remove missing values

# Selecting numerical features
numerical_features = ['bill_length_mm', 'bill_depth_mm', 'flipper_length_mm', 'body_mass_g']

# Creating scalers
scalers = {
    'Standard Scaling': StandardScaler(),
    'Min-Max Scaling': MinMaxScaler()
}
colors = ['gray', 'blue', 'orange']

# Plotting the histograms: one row for the original data, one row per scaler
fig, axes = plt.subplots(len(scalers) + 1, len(numerical_features), figsize=(15, 10))
fig.suptitle('Histograms of Scaled Features', fontsize=16)

# Original data histograms (first row)
for i, feature in enumerate(numerical_features):
    sns.histplot(df[feature], ax=axes[0, i], color=colors[0])
    axes[0, i].set_title(f'Original {feature}')
    axes[0, i].set_xlabel('')

# Scaled data histograms (one row per scaler)
for row, (name, scaler) in enumerate(scalers.items(), start=1):
    # Fit and transform the data
    scaled_data = scaler.fit_transform(df[numerical_features])
    scaled_df = pd.DataFrame(scaled_data, columns=numerical_features)
    for i, feature in enumerate(scaled_df.columns):
        sns.histplot(scaled_df[feature], ax=axes[row, i], color=colors[row])
        axes[row, i].set_title(f'{name} {feature}')
        axes[row, i].set_xlabel('')

plt.tight_layout(rect=[0, 0, 1, 0.95])  # Adjust subplots to fit the title
plt.show()
```
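A small check of the two claims about shape and units, on a synthetic right-skewed feature rather than the penguins data (so it runs without downloading anything). A positive linear rescaling leaves the skewness (one summary of a distribution's shape) unchanged, while a log transform does not; and the fitted scaler attributes `mean_`, `scale_`, `data_min_`, and `data_range_` translate a scaled value back into original units. The `skewness` helper is a hypothetical illustration, not a library function.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler

def skewness(x):
    """Hypothetical helper: population skewness, the mean of the cubed z-scores."""
    z = (x - x.mean()) / x.std()
    return np.mean(z ** 3)

# Synthetic right-skewed feature standing in for a real column (not penguins data)
rng = np.random.default_rng(0)
x = rng.exponential(scale=2.0, size=1000).reshape(-1, 1)

std_scaler = StandardScaler().fit(x)
mm_scaler = MinMaxScaler().fit(x)
x_std = std_scaler.transform(x)
x_mm = mm_scaler.transform(x)

# 1) Shape: a positive linear rescaling leaves the skewness unchanged ...
for name, values in [("original", x), ("standardized", x_std), ("min-max", x_mm)]:
    print(f"{name:>12}: skewness = {skewness(values.ravel()):.4f}")
# ... whereas a (non-linear) log transform changes it
print(f"{'log':>12}: skewness = {skewness(np.log(x.ravel())):.4f}")

# 2) Units: translate scaled values back into the original units
z = 1.5  # "1.5 standard deviations above the mean"
p = 0.5  # "halfway from the minimum to the maximum"
print(std_scaler.mean_ + z * std_scaler.scale_)
print(mm_scaler.data_min_ + p * mm_scaler.data_range_)

# inverse_transform performs the same conversion
assert np.allclose(std_scaler.inverse_transform([[z]]).ravel(), std_scaler.mean_ + z * std_scaler.scale_)
assert np.allclose(mm_scaler.inverse_transform([[p]]).ravel(), mm_scaler.data_min_ + p * mm_scaler.data_range_)
```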

The code below fits a simple linear regression of body mass on bill length, first on the original data and then after standardizing both variables.

```python
import seaborn as sns
from sklearn.preprocessing import StandardScaler
from sklearn.linear_model import LinearRegression

df = sns.load_dataset('penguins')
df.dropna(inplace=True)  # Remove missing values

# Feature (bill length) and target (body mass), both as one-column DataFrames
X = df[['bill_length_mm']]
y = df[['body_mass_g']]

# Fit a linear regression model on the original data
model = LinearRegression()
model.fit(X, y)
print(f"Intercept: {model.intercept_}, slope: {model.coef_}")

# Standardize the feature and the target, each with its own scaler
x_scaler = StandardScaler()
y_scaler = StandardScaler()
X_scaled = x_scaler.fit_transform(X)
y_scaled = y_scaler.fit_transform(y)

# Fit a linear regression model on the scaled data
model.fit(X_scaled, y_scaled)
print(f"Scaled intercept: {model.intercept_}, slope: {model.coef_}")
```

```
Intercept: [388.84515876], slope: [[86.79175965]]
Scaled intercept: [-3.97095663e-16], slope: [[0.58945111]]
```

Why is the intercept 0?

In the single variable case, the optimal intercept is given by

\[
\hat{\beta}_0 = \bar{Y} - \hat{\beta}_1 \bar{X}
\]

where \(\hat{\beta}_1\) is the fitted slope.

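After standardization, \(\bar{X} = 0\) and \(\bar{Y} = 0\), so the optimal intercept is 0; the printed value of about \(-4 \times 10^{-16}\) is zero up to floating-point round-off. The same is true for multiple linear regression, where the optimal intercept is \(\bar{Y} - \sum_j \hat{\beta}_j \bar{X}_j\) (with \(\hat{\beta}_j\) the coefficient of feature \(j\)) and every \(\bar{X}_j = 0\) after standardization.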
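A quick numerical check of these facts, using synthetic data (hypothetical stand-ins, not the penguins measurements) so that it runs without downloading the dataset. It also shows that when both variables are standardized, the fitted slope equals the Pearson correlation between them; so the scaled slope of 0.589 in the output above is the sample correlation between bill length and body mass.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.preprocessing import StandardScaler

# Synthetic data loosely standing in for (bill length, body mass)
rng = np.random.default_rng(42)
X = rng.normal(loc=44.0, scale=5.0, size=(200, 1))
y = 400.0 + 87.0 * X[:, 0] + rng.normal(scale=600.0, size=200)

# Raw fit: the intercept satisfies beta0 = mean(y) - beta1 * mean(x)
raw_model = LinearRegression().fit(X, y)
print(raw_model.intercept_, y.mean() - raw_model.coef_[0] * X[:, 0].mean())

# Standardize X and y with separate scalers, then refit
X_s = StandardScaler().fit_transform(X)
y_s = StandardScaler().fit_transform(y.reshape(-1, 1)).ravel()
std_model = LinearRegression().fit(X_s, y_s)

# Intercept is 0 up to round-off; slope equals the Pearson correlation of x and y
print(std_model.intercept_)
print(std_model.coef_[0], np.corrcoef(X[:, 0], y)[0, 1])
```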