import matplotlib.pyplot as plt
import numpy as np
from sklearn.linear_model import LinearRegression, LogisticRegression

def sigmoid(z):
    """Logistic (sigmoid) function: maps real-valued scores to probabilities in (0, 1)."""
    return 1 / (1 + np.exp(-z))
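
# Sketch of a numerically stable variant (an assumption, not used by the plot below):
# 1 / (1 + np.exp(-z)) overflows in np.exp for large negative z and emits a
# RuntimeWarning. Rewriting both branches in terms of e = exp(-|z|), which is
# always in (0, 1], avoids that. `stable_sigmoid` is a hypothetical helper name.
import numpy as np


def stable_sigmoid(z):
    """Sigmoid that never calls np.exp on a large positive argument."""
    z = np.asarray(z, dtype=float)
    e = np.exp(-np.abs(z))  # safe: exponent is always <= 0
    # For z >= 0: 1 / (1 + exp(-z)); for z < 0: exp(z) / (1 + exp(z))
    return np.where(z >= 0, 1.0 / (1.0 + e), e / (1.0 + e))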

# Set seed for reproducibility
np.random.seed(0)
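
# Sketch: NumPy's newer Generator API (np.random.default_rng) is also reproducible
# when seeded, and it does not touch the global state that np.random.seed controls,
# so this check leaves the toy data below unchanged. Its bit generator (PCG64)
# differs from the legacy one, so switching the script to it would change the
# sampled points. `rng_a`, `rng_b`, `draws_a`, `draws_b` are illustrative names.
import numpy as np

rng_a = np.random.default_rng(0)
rng_b = np.random.default_rng(0)
draws_a = rng_a.normal(2, 1, 5)  # same call pattern as x_class1 below
draws_b = rng_b.normal(2, 1, 5)  # identical seed, so identical draws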

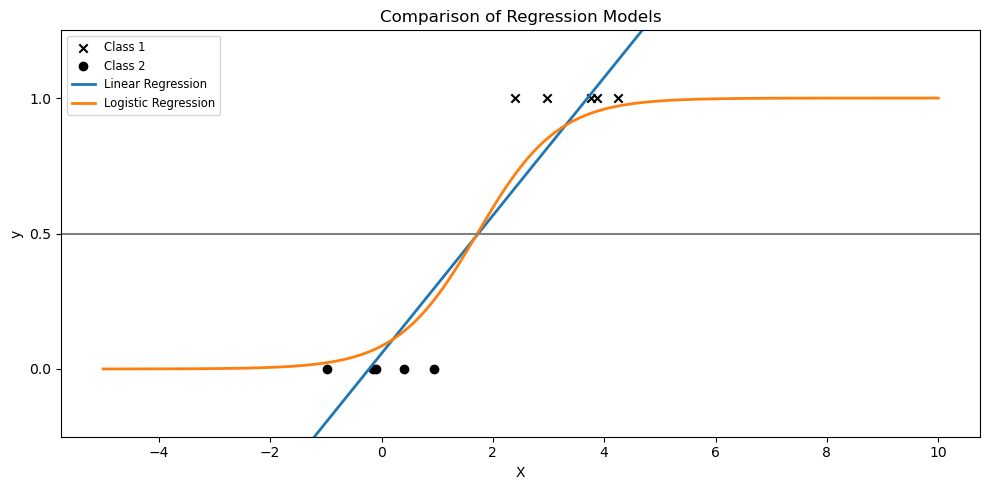

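# Generate a toy 1-D dataset: N points per class from unit-variance Gaussians
N = 5
x_class1 = np.random.normal(2, 1, N)  # class 1 centred at x = 2
x_class2 = np.random.normal(0, 1, N)  # class 2 centred at x = 0
y_class1 = np.ones(N)   # class 1 is labelled 1
y_class2 = np.zeros(N)  # class 2 is labelled 0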

# Stack both classes into a (2N, 1) feature matrix and a length-2N label vector
X = np.concatenate((x_class1, x_class2)).reshape(-1, 1)
y = np.concatenate((y_class1, y_class2))

# Fit linear regression (ordinary least squares) directly to the 0/1 labels
ols = LinearRegression()
ols.fit(X, y)
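
# Sketch (not scikit-learn's internal code): LinearRegression fits ordinary least
# squares, so its coef_ and intercept_ should match a direct least-squares solve
# minimising ||A w - y||^2 with A = [x, 1]. `ols_closed_form`, `X_demo`, `y_demo`
# and `ols_demo` are hypothetical names; the tiny dataset is hand-picked so the
# answer (slope 0.4, intercept -0.1) can be checked by hand.
import numpy as np
from sklearn.linear_model import LinearRegression


def ols_closed_form(X_feat, y_target):
    """Return (slope array, intercept) from a least-squares solve on [X, 1]."""
    A = np.hstack([X_feat, np.ones((X_feat.shape[0], 1))])  # append a bias column
    w, *_ = np.linalg.lstsq(A, y_target, rcond=None)
    return w[:-1], w[-1]


X_demo = np.array([[0.0], [1.0], [2.0], [3.0]])
y_demo = np.array([0.0, 0.0, 1.0, 1.0])
slope_demo, intercept_demo = ols_closed_form(X_demo, y_demo)
ols_demo = LinearRegression().fit(X_demo, y_demo)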

# Fit logistic regression (scikit-learn defaults: L2 penalty with C=1.0)
clf = LogisticRegression()
clf.fit(X, y)
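
# Sketch: for a binary LogisticRegression, decision_function(X) equals
# X @ coef_.T + intercept_, and predict_proba(X)[:, 1] (the column for class 1,
# since classes_ is sorted) is the sigmoid of that score. This is why applying
# sigmoid to the linear score reproduces the model's probabilities.
# `X_lr_demo`, `y_lr_demo`, `clf_demo` are hypothetical names on hand-picked data.
import numpy as np
from sklearn.linear_model import LogisticRegression

X_lr_demo = np.array([[-2.0], [-1.0], [-0.5], [0.5], [1.0], [2.0]])
y_lr_demo = np.array([0, 0, 0, 1, 1, 1])
clf_demo = LogisticRegression().fit(X_lr_demo, y_lr_demo)

scores_demo = clf_demo.decision_function(X_lr_demo)             # shape (6,)
manual_scores = X_lr_demo @ clf_demo.coef_.T + clf_demo.intercept_  # shape (6, 1)
p_from_sigmoid = 1.0 / (1.0 + np.exp(-scores_demo))
p_from_sklearn = clf_demo.predict_proba(X_lr_demo)[:, 1]        # P(y == 1 | x)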

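# Evaluate both models on a dense grid of x values for plotting
X_test = np.linspace(-5, 10, 300).reshape(-1, 1)
y_test = ols.predict(X_test)
# decision_function gives X_test @ coef_.T + intercept_; the sigmoid maps it to P(y = 1 | x)
logistic_prob = sigmoid(clf.decision_function(X_test))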
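
# Sketch: the x at which each fitted model crosses the horizontal 0.5 line in the
# plot, i.e. where it would switch its predicted class. For the OLS line w*x + b
# this is x* = (0.5 - b) / w; for logistic regression sigmoid(w*x + b) = 0.5
# exactly when w*x + b = 0, so x* = -b / w. Both helpers are hypothetical and
# assume a single feature with a non-zero slope, e.g.
# ols_threshold(ols.coef_[0], ols.intercept_) or
# logistic_threshold(clf.coef_[0, 0], clf.intercept_[0]).
import numpy as np


def ols_threshold(slope, intercept, level=0.5):
    """x where slope * x + intercept == level."""
    return (level - intercept) / slope


def logistic_threshold(slope, intercept):
    """x where sigmoid(slope * x + intercept) == 0.5, i.e. the score is zero."""
    return -intercept / slope


# Toy check with hand-picked coefficients (not the fitted models)
x_star_line = ols_threshold(0.4, -0.1)        # (0.5 + 0.1) / 0.4
x_star_logit = logistic_threshold(2.0, -3.0)  # 3.0 / 2.0
p_at_star = 1.0 / (1.0 + np.exp(-(2.0 * x_star_logit - 3.0)))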

# Plotting
plt.figure(figsize=(10, 5))

# Training data: class 1 (y = 1) as crosses, class 2 (y = 0) as circles
plt.scatter(x_class1, y_class1, label='Class 1', marker='x', color='k')
plt.scatter(x_class2, y_class2, label='Class 2', marker='o', color='k')

# Fitted curves: the OLS line and the logistic probability P(y = 1 | x)
plt.plot(X_test, y_test, label="Linear Regression", linewidth=2)
plt.plot(X_test, logistic_prob, label="Logistic Regression", linewidth=2)

plt.title("Comparison of Regression Models")
plt.axhline(0.5, color=".5")  # 0.5 decision threshold
plt.ylabel("y")
plt.xlabel("X")
plt.yticks([0, 0.5, 1])
plt.ylim(-0.25, 1.25)
plt.legend(loc="upper left", fontsize="small")
plt.tight_layout()
plt.show()